| ID | prof_ID | score | age | bty_avg | gender | ethnicity | language | rank | pic_outfit | pic_color | cls_did_eval | cls_students | cls_level |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

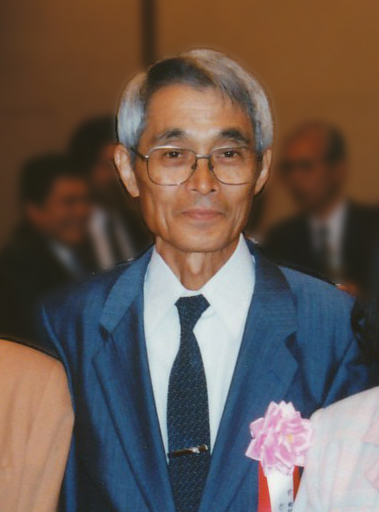

| 240 | 45 | 3.7 | 33 | 7.000 | male | not minority | english | tenure track | formal | color | 13 | 15 | upper |

| 260 | 49 | 4.2 | 52 | 3.167 | male | not minority | english | tenured | not formal | color | 78 | 98 | upper |

| 294 | 56 | 4.4 | 32 | 3.833 | male | not minority | english | tenure track | formal | black&white | 20 | 22 | upper |

Variable Selection in Multiple Regression

Reminders About Deadlines

Revision Deadlines

Lab 3 revisions are due on Wednesday (May 7)

Statistical Critique revisions are due on Wednesday (May 7)

Lab 4 revisions are due on Friday (May 9)

Lab 3 Revisions

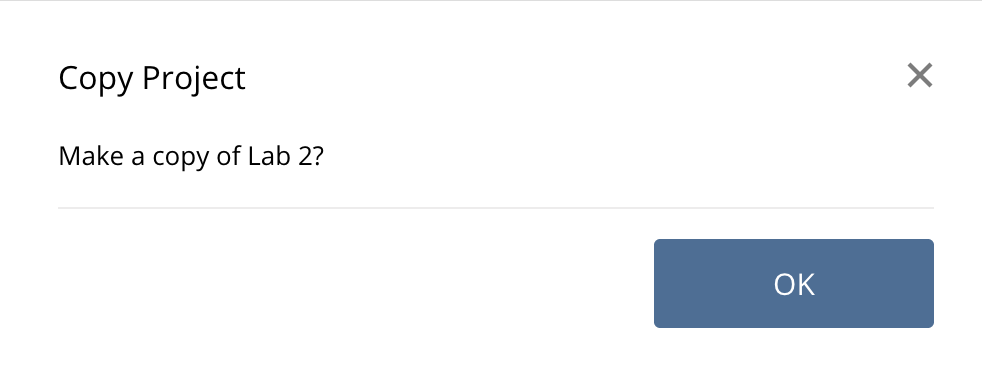

- Log in to Posit Cloud

- Open your Weeks 2-3 Group Workspace

- Find Lab 3

- Make a personal copy of your group’s Lab 3

Every member must have their own copy of the lab! No one works in the original document.

Lab 4

- Question 8: Write out the estimated regression equation
  - Your equation needs to indicate the explanatory and response variables (not x and y).
  - Your equation needs to indicate that the response is estimated, not an exact value.
- Question 9: Interpret the slope coefficient
  - If you increase year by 1, how much do you expect the ice duration to change?
- Question 10: A different slope interpretation
  - If you increase year by 100, how much do you expect the ice duration to change?

Model Selection


What is model selection?

Why use model selection?

1. Lots of available predictor variables

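For example, the evals dataset (previewed in the table at the top of these notes) has many candidate explanatory variables.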

2. Interested in prediction, not explanation

You want to predict an outcome variable \(y\) based on the information contained in a set of predictor variables \(x\). You don’t care so much about understanding how all the variables relate and interact with one another, but rather only whether you can make good predictions about \(y\) using the information in \(x\).

ModernDive

How do you use model selection?

- Stepwise Selection
  - Forward Selection
  - Backward Selection
- Resampling Methods
  - Cross Validation
  - Testing / Training Datasets

With any of these methods, you choose the criterion that decides whether one model is better than another.

Model Comparison Measures

\(R^2\) – Coefficient of Determination

Wright, Sewall (1921). Correlation and Causation. Journal of Agricultural Research 20: 557-585.

In statistics, the coefficient of determination, denoted \(R^2\) or \(r^2\) and pronounced “R squared,” is the proportion of the variation in the dependent variable that is predictable from the independent variable(s).

Wikipedia

\(R^2 = 1 - \frac{\text{var}(\text{residuals})}{\text{var}(y)}\)

\(\text{var}(\text{residuals})\) is the variance of the residuals “leftover” from the regression model

\(\text{var}(y)\) is the inherent variability of the response variable

Suppose we have a simple linear regression with an \(R^2\) of 0.85. How would you interpret this quantity?

Wait!

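\(R^2\) never decreases when you add explanatory variables (and in practice it almost always increases).

The variance of the residuals can never increase when you add an explanatory variable, because least squares could always set the new variable's coefficient to zero and reproduce the smaller model's fit.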

Simple Linear Regression

\(0.85 = 1 - \frac{0.75}{5}\)

One Additional Variable

\(0.86 = 1 - \frac{0.7}{5}\)
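The claim above can be checked with a short simulation. This sketch assumes hypothetical simulated data, and a small hand-written least-squares solver stands in for regression software. It fits y on x, then refits after adding a column of pure random noise that has no relationship to y by construction; the second \(R^2\) is never smaller than the first.

```python
import random


def ols_coefficients(X, y):
    """Least-squares coefficients: solve the normal equations (X^T X) b = X^T y
    by Gauss-Jordan elimination. Adequate for a few columns, not numerically robust."""
    p = len(X[0])
    # Augmented matrix [X^T X | X^T y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(p)]
    for c in range(p):
        pivot = max(range(c, p), key=lambda r: abs(A[r][c]))  # partial pivoting
        A[c], A[pivot] = A[pivot], A[c]
        for r in range(p):
            if r != c:
                factor = A[r][c] / A[c][c]
                A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    return [A[i][p] / A[i][i] for i in range(p)]


def r_squared(X, y):
    """R^2 = 1 - SSE / SST (same as the variance ratio when X has an intercept column)."""
    coefs = ols_coefficients(X, y)
    fitted = [sum(b * v for b, v in zip(coefs, row)) for row in X]
    ybar = sum(y) / len(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    sst = sum((yi - ybar) ** 2 for yi in y)
    return 1 - sse / sst


# Hypothetical simulated data
rng = random.Random(1)
n = 30
x = [rng.uniform(0, 10) for _ in range(n)]
noise_col = [rng.gauss(0, 1) for _ in range(n)]  # unrelated to y by construction
y = [1.0 + 0.5 * xi + rng.gauss(0, 2) for xi in x]

X_simple = [[1.0, xi] for xi in x]                        # intercept + x
X_plus = [[1.0, xi, zi] for xi, zi in zip(x, noise_col)]  # intercept + x + noise

r2_simple = r_squared(X_simple, y)
r2_plus = r_squared(X_plus, y)
print(f"R^2 with x only:      {r2_simple:.4f}")
print(f"R^2 with x and noise: {r2_plus:.4f}")
```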

Adjusted \(R^2\)

Ezekiel, Mordecai (1930). Methods of Correlation Analysis. Wiley, pp. 208-211.

The use of an adjusted \(R^2\) is an attempt to account for the phenomenon of the \(R^2\) automatically increasing when extra explanatory variables are added to the model.

Wikipedia

\(R^2_{adj} = 1 - (1 - R^2) \times \frac{n - 1}{n - k - 1}\)

\(n\) is the sample size

\(k\) is the number of slope coefficients estimated (the intercept is not counted)
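A direct translation of the adjusted \(R^2\) formula, as a sketch. The \(R^2\) values 0.85 and 0.86 come from the simple-versus-one-additional-variable example above; the sample size n = 20 and the value 0.851 are hypothetical. With n = 20, going from 0.85 to 0.86 with one extra variable still raises adjusted \(R^2\), but a tiny gain (0.85 to 0.851) lowers it, because the penalty factor \(\frac{n-1}{n-k-1}\) grows with k.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - k - 1).

    n: sample size; k: number of slope coefficients (intercept not counted).
    """
    if n - k - 1 <= 0:
        raise ValueError("need n > k + 1")
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Hypothetical sample size n = 20
print(round(adjusted_r2(0.85, n=20, k=1), 4))   # one explanatory variable
print(round(adjusted_r2(0.86, n=20, k=2), 4))   # one more variable, R^2 up by 0.01
print(round(adjusted_r2(0.851, n=20, k=2), 4))  # one more variable, R^2 up by 0.001
```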

Suppose you have a categorical variable with 4 levels included in your parallel slopes multiple linear regression.

What value will you use for \(k\) in the calculation of \(n - k - 1\)?

p-values

Fisher, R. A. (1950). Statistical Methods for Research Workers.

In null-hypothesis significance testing, the p-value is the probability of obtaining test results at least as extreme as the result actually observed, under the assumption that the null hypothesis is correct. A very small p-value means that such an extreme observed outcome would be very unlikely under the null hypothesis.

Wikipedia

AIC

Akaike, H. (1973). Information theory and an extension of the maximum likelihood principle.

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models.

Wikipedia

How do you use AIC to choose a “best” model?

| Model | AIC | Delta AIC |
|---|---|---|
| Full Model | 4724.970 | 0.000000 |
| All Variables Except Year | 4727.242 | 2.272501 |
| All Variables Except Flipper Length | 4757.214 | 32.244605 |
| All Variables Except Species | 4793.681 | 68.710933 |
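A sketch of how the Delta AIC column is computed from the AIC column: subtract the smallest AIC from every model's AIC, so the best (lowest-AIC) model has Delta AIC = 0. The AIC values are copied from the table above; because they are rounded to three decimals, the computed deltas agree with the table only to about three decimals. The cutoff of 2 used in the labels is a common rule of thumb, not a hard rule.

```python
# AIC values copied from the table above (rounded to 3 decimals)
aic = {
    "Full Model": 4724.970,
    "All Variables Except Year": 4727.242,
    "All Variables Except Flipper Length": 4757.214,
    "All Variables Except Species": 4793.681,
}

best = min(aic.values())  # lower AIC is better
delta = {model: value - best for model, value in aic.items()}

# List models from best to worst, flagging those more than 2 AIC units behind the best
for model, d in sorted(delta.items(), key=lambda item: item[1]):
    label = "more than 2 worse than best" if d > 2 else "within 2 of best"
    print(f"{model:<38} {d:8.3f}  {label}")
```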

If you’ve ever checked whether \(\Delta\) AIC \(> 2\), you have done something mathematically close to checking \(p < 0.05\) (for two nested models that differ by one parameter).
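Why the two are close, for two nested models that differ by one parameter: since AIC \(= 2k - 2\ln\hat{L}\), the reduced model's AIC minus the full model's AIC equals \(\text{LR} - 2\), where \(\text{LR} = 2(\ln\hat{L}_{\text{full}} - \ln\hat{L}_{\text{reduced}})\) is the likelihood-ratio statistic. So \(\Delta\) AIC \(> 2\) is the same as \(\text{LR} > 4\), and under the null hypothesis LR is approximately \(\chi^2_1\)-distributed. The sketch below computes that tail probability with the standard-library `math.erfc`, using the identity \(P(\chi^2_1 > x) = P(|Z| > \sqrt{x})\).

```python
import math


def chi2_1df_upper_tail(x):
    """P(chi-square with 1 df > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(x / 2))


# Delta AIC > 2 between nested models differing by one parameter <=> LR statistic > 2 + 2
p_equiv = chi2_1df_upper_tail(2 + 2)
print(round(p_equiv, 4))  # 0.0455
```

So the \(\Delta\) AIC \(> 2\) rule behaves roughly like a likelihood-ratio test at the 0.0455 level, close to the familiar 0.05.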

Model Selection Activity!

Backward Selection by Hand

- Start with the “full” model (every explanatory variable is included)
- Use adjusted \(R^2\) to summarize the “fit” of this model
- Decide which one variable to remove
  - Remove the variable whose removal gives the highest adjusted \(R^2\)
- Decide which one variable to remove next
  - Again, choose the removal that gives the highest adjusted \(R^2\)
- Keep removing variables until no single removal increases adjusted \(R^2\)
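The by-hand procedure above can be automated. This is a sketch under several assumptions: simulated data with hypothetical numeric predictors `x1` to `x4` (only `x1` and `x2` actually affect y), each predictor contributes exactly one coefficient to k (a categorical variable would contribute more), and a small hand-written least-squares solver stands in for regression software.

```python
import random


def fit_sse(X, y):
    """Residual sum of squares of the least-squares fit, solving (X^T X) b = X^T y
    by Gauss-Jordan elimination (fine for a few columns, not numerically robust)."""
    p = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(p)]
    for c in range(p):
        pivot = max(range(c, p), key=lambda r: abs(A[r][c]))
        A[c], A[pivot] = A[pivot], A[c]
        for r in range(p):
            if r != c:
                factor = A[r][c] / A[c][c]
                A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    coefs = [A[i][p] / A[i][i] for i in range(p)]
    return sum((yi - sum(b * v for b, v in zip(coefs, row))) ** 2 for row, yi in zip(X, y))


def adjusted_r2(names, data, y):
    """Adjusted R^2 of y ~ intercept + the numeric columns listed in `names`."""
    n, k = len(y), len(names)
    X = [[1.0] + [data[name][i] for name in names] for i in range(n)]
    ybar = sum(y) / n
    r2 = 1 - fit_sse(X, y) / sum((yi - ybar) ** 2 for yi in y)
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def backward_select(data, y):
    """Backward selection: repeatedly drop the variable whose removal gives the
    highest adjusted R^2; stop when no single removal increases adjusted R^2."""
    current = list(data)                  # start with the full model
    best = adjusted_r2(current, data, y)
    while len(current) > 1:
        scores = {v: adjusted_r2([c for c in current if c != v], data, y) for v in current}
        drop = max(scores, key=scores.get)
        if scores[drop] <= best:          # no removal helps: stop
            break
        current.remove(drop)
        best = scores[drop]
    return current, best


# Hypothetical simulated data: y depends on x1 and x2; x3 and x4 are pure noise
rng = random.Random(7)
n = 100
data = {name: [rng.uniform(0, 10) for _ in range(n)] for name in ["x1", "x2", "x3", "x4"]}
y = [2 + 3 * data["x1"][i] - 2 * data["x2"][i] + rng.gauss(0, 1) for i in range(n)]

selected, adj = backward_select(data, y)
print(selected, round(adj, 3))
```

In this simulation `x1` and `x2` should survive every round; whether the noise columns `x3` and `x4` are dropped depends on the random draw, since adjusted \(R^2\) can rise slightly when a noise variable happens to track y.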

What’s your best model?

Adding a Constraint

Repeat the same process, but now a variable is removed only if removing it increases adjusted \(R^2\) by at least 0.02 (2 percentage points).
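The constraint only changes the stopping rule. A sketch of that single decision, assuming hypothetical adjusted \(R^2\) values (the variable names echo columns of the evals data, but the numbers are made up): with no threshold, `age` would be removed because its removal gives the highest adjusted \(R^2\); with the 0.02 threshold its gain of 0.008 is too small, so nothing is removed and the process stops.

```python
def variable_to_remove(current_adj, adj_without, min_gain=0.02):
    """Return the variable whose removal gives the highest adjusted R^2, but only if
    that removal raises adjusted R^2 by at least min_gain (and by more than 0);
    otherwise return None, meaning: stop removing variables."""
    best_var = max(adj_without, key=adj_without.get)
    gain = adj_without[best_var] - current_adj
    if gain <= 0 or gain < min_gain:
        return None
    return best_var


# Hypothetical values: adjusted R^2 of the current model, and after removing each variable
current = 0.150
after_removal = {"age": 0.158, "pic_color": 0.155, "cls_level": 0.149}

print(variable_to_remove(current, after_removal, min_gain=0.0))   # original rule
print(variable_to_remove(current, after_removal, min_gain=0.02))  # with the 0.02 constraint
```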

What’s your best model?

If you’re not interested in prediction, what should you use instead?

In fact, many statisticians discourage the use of stepwise regression alone for model selection and advocate, instead, for a more thoughtful approach that carefully considers the research focus and features of the data.

Introduction to Modern Statistics

For Wednesday

Peer Review

Please print your Midterm Project and bring it to class!