seuss_dir = "http://dlsun.github.io/pods/data/drseuss/"

seuss_files = [
    "green_eggs_and_ham.txt",
    "cat_in_the_hat.txt",
    "fox_in_socks.txt",
    "how_the_grinch_stole_christmas.txt",
    "hop_on_pop.txt",
    "horton_hears_a_who.txt",
    "oh_the_places_youll_go.txt",
    "one_fish_two_fish.txt",
]

Bag-of-Words and TF-IDF

Exam 1

What to expect

Content

- Everything covered through the end of this week

- Visualizing numerical & categorical variables

- Summarizing numerical & categorical variables

- Distances between observations

- Preprocessing variables (scaling / one-hot encoding)

Structure

- 80 minutes

- First part of class (12:10 - 1:30pm; 3:10 - 4:30pm)

- Google Colab notebook

- Completed on the desktop computer in front of you

- Resources you can use:

  - Your own Colab notebooks

  - Any course materials

  - Official Python documentation

- Resources you cannot use:

  - Generative AI for anything beyond text completion

  - Google for anything except to reach Python documentation pages

Data for Exam 1

- Dataset on coffee attributes

- Derived from a Kaggle dataset (link to documentation)

- Raw data can be downloaded here (link to data)

The story so far…

Preprocessing

For quantitative variables:

Standardize: Subtract the mean (of the column) and divide by the standard deviation (of the column).

MinMax: Subtract the minimum value (of the column), then divide by the range (maximum minus minimum) of the column.
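The two quantitative recipes above can be checked by hand against scikit-learn's StandardScaler and MinMaxScaler. This is a minimal sketch on a made-up array. It uses NumPy's default population standard deviation (ddof=0), which is what StandardScaler uses; pandas' .std() defaults to the sample version (ddof=1) and would give slightly different numbers.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical toy data: 4 observations (rows) of 2 quantitative variables (columns).
X = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [3.0, 30.0],
              [6.0, 40.0]])

# Standardize by hand: subtract each column's mean, divide by its standard deviation.
# np.std defaults to ddof=0 (population SD), matching StandardScaler.
X_std_manual = (X - X.mean(axis=0)) / X.std(axis=0)

# MinMax by hand: subtract each column's minimum, divide by its range (max - min).
col_min = X.min(axis=0)
X_minmax_manual = (X - col_min) / (X.max(axis=0) - col_min)

# The scikit-learn transformers should produce the same numbers.
X_std = StandardScaler().fit_transform(X)
X_minmax = MinMaxScaler().fit_transform(X)

print(np.allclose(X_std, X_std_manual))        # True
print(np.allclose(X_minmax, X_minmax_manual))  # True
print(X_minmax_manual[:, 0])                   # first column rescaled to 0, 0.2, 0.4, 1
```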

For categorical variables:

- OneHotEncode: Create dummy variables (columns of 0s and 1s), one for every level of a categorical variable.

Use make_column_transformer() to combine several preprocessing steps, each applied to its own set of columns.

Distances

We measure similarity between observations by calculating distances.

Euclidean distance: sum of squared differences, then square root

Manhattan distance: sum of absolute differences
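Working the two definitions through on a pair of made-up observations makes the difference concrete: the differences are -3, -4 and 0, so the Euclidean distance is sqrt(9 + 16 + 0) = 5, while the Manhattan distance is 3 + 4 + 0 = 7.

```python
import numpy as np

# Two hypothetical observations (assumed to be preprocessed already).
a = np.array([1.0, 2.0, 3.0])
b = np.array([4.0, 6.0, 3.0])

diff = a - b  # [-3, -4, 0]

# Euclidean distance: sum of squared differences, then square root.
euclidean = np.sqrt(np.sum(diff ** 2))  # sqrt(9 + 16 + 0) = 5.0

# Manhattan distance: sum of absolute differences.
manhattan = np.sum(np.abs(diff))        # 3 + 4 + 0 = 7.0

print(euclidean, manhattan)  # 5.0 7.0
```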

In scikit-learn, use the pairwise_distances() function to get back a 2D NumPy array of the distances between every pair of observations.
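A minimal sketch of pairwise_distances() on three made-up observations. The metric parameter switches between the two distances above ("euclidean" is the default). Entry [i, j] of the result is the distance between rows i and j, so the matrix is symmetric with zeros on the diagonal.

```python
import numpy as np
from sklearn.metrics import pairwise_distances

# Hypothetical data: 3 observations (rows) of 2 variables (columns).
X = np.array([[0.0, 0.0],
              [3.0, 4.0],
              [6.0, 8.0]])

D_euclidean = pairwise_distances(X, metric="euclidean")
D_manhattan = pairwise_distances(X, metric="manhattan")

# Euclidean rows: [0, 5, 10], [5, 0, 5], [10, 5, 0]
print(D_euclidean)
# Manhattan distance between rows 0 and 2: 6 + 8 = 14
print(D_manhattan[0, 2])
```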

But what if our data are text instead of categories?

Bag of Words

Text Data

A textual data set consists of multiple texts. Each text is called a document. The collection of texts is called a corpus.
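In Python, a corpus can be held as a list of strings, one per document. As a rough preview of the idea behind the "Bag of Words" heading, here is a plain-Python sketch (not any particular library's implementation) that turns each document into word counts, ignoring case, punctuation and word order. The two documents are the opening words of two texts in the example corpus, and the tokenizing regular expression is an assumption chosen for simplicity.

```python
import re
from collections import Counter

# A tiny corpus: each string is one document.
corpus = [
    "I am Sam\n\nI am Sam\nSam I am",
    "One fish, two fish, red fish",
]

def bag_of_words(document):
    """Count word occurrences, ignoring case, punctuation, and word order."""
    words = re.findall(r"[a-z']+", document.lower())
    return Counter(words)

bags = [bag_of_words(doc) for doc in corpus]
print(bags[0])          # counts: 'i' -> 3, 'am' -> 3, 'sam' -> 3
print(bags[1]["fish"])  # 3
```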

Example Corpus:

- "I am Sam\n\nI am Sam\nSam I..."

- "The sun did not shine.\nIt was..."

- "Fox\nSocks\nBox\nKnox\n\nKnox..."

- "Every Who\nDown in Whoville\n..."

- "UP PUP Pup is up.\nCUP PUP..."

- "On the fifteenth of May, in the..."

- "Congratulations!\nToday is your..."

- "One fish, two fish, red fish..."

Reading Text Data

Documents are usually stored in separate files, so we have to read them in one by one.
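A minimal sketch of reading the files one by one, assuming each file is plain UTF-8 text served at seuss_dir + filename. The helper names fetch_text and read_corpus (and the fetch parameter) are hypothetical. Collecting the texts in a dict keyed by filename is one natural choice; calling .values() on such a dict produces a dict_values(...) object like the one printed on the next slide. The file list is repeated so the block runs on its own.

```python
from urllib.request import urlopen

seuss_dir = "http://dlsun.github.io/pods/data/drseuss/"
seuss_files = [
    "green_eggs_and_ham.txt",
    "cat_in_the_hat.txt",
    "fox_in_socks.txt",
    "how_the_grinch_stole_christmas.txt",
    "hop_on_pop.txt",
    "horton_hears_a_who.txt",
    "oh_the_places_youll_go.txt",
    "one_fish_two_fish.txt",
]

def fetch_text(url):
    """Download one document and decode it (assumes UTF-8 encoding)."""
    with urlopen(url) as response:
        return response.read().decode("utf-8")

def read_corpus(base_url, filenames, fetch=fetch_text):
    """Read each file one by one; return a dict mapping filename -> text.

    `fetch` is a hypothetical hook so a different loader (e.g. one that
    reads local files) can be swapped in.
    """
    docs = {}
    for filename in filenames:
        docs[filename] = fetch(base_url + filename)
    return docs

# Usage (requires network access):
# docs = read_corpus(seuss_dir, seuss_files)
# docs.values()
```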

Text Data

dict_values(['I am Sam\n\nI am Sam\nSam I am\n\nThat Sam-I-am\nThat Sam-I-am!\nI do not like\nthat Sam-I-am\n\nDo you like\ngreen eggs and ham\n\nI do not like them,\nSam-I-am.\nI do not like\ngreen eggs and ham.\n\nWould you like them\nHere or there?\n\nI would not like them\nhere or there.\nI would not like them\nanywhere.\nI do not like\ngreen eggs and ham.\nI do not like them,\nSam-I-am\n\nWould you like them\nin a house?\nWould you like them\nwith a mouse?\n\nI do not like them\nin a house.\nI do not like them\nwith a mouse.\nI do not like them\nhere or there.\nI do not like them\nanywhere.\nI do not like green eggs and ham.\nI do not like them, Sam-I-am.\n\n\nWould you eat them\nin a box?\nWould you eat them\nwith a fox?\n\nNot in a box.\nNot with a fox.\nNot in a house.\nNot with a mouse.\nI would not eat them here or there.\nI would not eat them anywhere.\nI would not eat green eggs and ham.\nI do not like them, Sam-I-am.\n\nWould you? Could you?\nin a car?\nEat them! Eat them!\nHere they are.\n\nI would not,\ncould not,\nin a car.\n\nYou may like them.\nYou will see.\nYou may like them\nin a tree?\n\nI would not, could not in a tree.\nNot in a car! You let me be.\n\nI do not like them in a box.\nI do not like them with a fox\nI do not like them in a house\nI do mot like them with a mouse\nI do not like them here or there.\nI do not like them anywhere.\nI do not like green eggs and ham.\nI do not like them, Sam-I-am.\n\nA train! A train!\nA train! A train!\nCould you, would you\non a train?\n\nNot on a train! Not in a tree!\nNot in a car! Sam! 
Let me be!\nI would not, could not, in a box.\nI could not, would not, with a fox.\nI will not eat them with a mouse\nI will not eat them in a house.\nI will not eat them here or there.\nI will not eat them anywhere.\nI do not like them, Sam-I-am.\n\n\nSay!\nIn the dark?\nHere in the dark!\nWould you, could you, in the dark?\n\nI would not, could not,\nin the dark.\n\nWould you, could you,\nin the rain?\n\nI would not, could not, in the rain.\nNot in the dark. Not on a train,\nNot in a car, Not in a tree.\nI do not like them, Sam, you see.\nNot in a house. Not in a box.\nNot with a mouse. Not with a fox.\nI will not eat them here or there.\nI do not like them anywhere!\n\nYou do not like\ngreen eggs and ham?\n\nI do not\nlike them,\nSam-I-am.\n\nCould you, would you,\nwith a goat?\n\nI would not,\ncould not.\nwith a goat!\n\nWould you, could you,\non a boat?\n\nI could not, would not, on a boat.\nI will not, will not, with a goat.\nI will not eat them in the rain.\nI will not eat them on a train.\nNot in the dark! Not in a tree!\nNot in a car! You let me be!\nI do not like them in a box.\nI do not like them with a fox.\nI will not eat them in a house.\nI do not like them with a mouse.\nI do not like them here or there.\nI do not like them ANYWHERE!\n\nI do not like\ngreen eggs\nand ham!\n\nI do not like them,\nSam-I-am.\n\nYou do not like them.\nSO you say.\nTry them! Try them!\nAnd you may.\nTry them and you may I say.\n\nSam!\nIf you will let me be,\nI will try them.\nYou will see.\n\nSay!\nI like green eggs and ham!\nI do! I like them, Sam-I-am!\nAnd I would eat them in a boat!\nAnd I would eat them with a goat...\nAnd I will eat them in the rain.\nAnd in the dark. And on a train.\nAnd in a car. And in a tree.\nThey are so good so good you see!\n\nSo I will eat them in a box.\nAnd I will eat them with a fox.\nAnd I will eat them in a house.\nAnd I will eat them with a mouse.\nAnd I will eat them here and there.\nSay! 
I will eat them ANYWHERE!\n\nI do so like\ngreen eggs and ham!\nThank you!\nThank you,\nSam-I-am\n', 'The sun did not shine.\nIt was too wet to play.\nSo we sat in the house\nAll that cold, cold, wet day.\n\nI sat there with Sally.\nWe sat there, we two.\nAnd I said, "How I wish\nWe had something to do!"\n\nToo wet to go out\nAnd too cold to play ball.\nSo we sat in the house.\nWe did nothing at all.\n\nSo all we could do was to\n\nSit!\nSit!\nSit!\nSit!\n\nAnd we did not like it.\nNot one little bit.\n\nBUMP!\n\nAnd then\nsomething went BUMP!\nHow that bump made us jump!\n\nWe looked!\nThen we saw him step in on the mat!\nWe looked!\nAnd we saw him!\nThe Cat in the Hat!\nAnd he said to us,\n"Why do you sit there like that?"\n"I know it is wet\nAnd the sun is not sunny.\nBut we can have\nLots of good fun that is funny!"\n\n"I know some good games we could play,"\nSaid the cat.\n"I know some new tricks,"\nSaid the Cat in the Hat.\n"A lot of good tricks.\nI will show them to you.\nYour mother\nWill not mind at all if I do."\n\nThen Sally and I\nDid not know what to say.\nOur mother was out of the house\nFor the day.\n\nBut our fish said, "No! No!\nMake that cat go away!\nTell that Cat in the Hat\nYou do NOT want to play.\nHe should not be here.\nHe should not be about.\nHe should not be here\nWhen your mother is out!"\n\n"Now! Now! Have no fear.\nHave no fear!" said the cat.\n"My tricks are not bad,"\nSaid the Cat in the Hat.\n"Why, we can have\nLots of good fun, if you wish,\nwith a game that I call\nUP-UP-UP with a fish!"\n\n"Put me down!" said the fish.\n"This is no fun at all!\nPut me down!" said the fish.\n"I do NOT wish to fall!"\n\n"Have no fear!" said the cat.\n"I will not let you fall.\nI will hold you up high\nAs I stand on a ball.\nWith a book on one hand!\nAnd a cup on my hat!\nBut that is not ALL I can do!"\nSaid the cat...\n\n"Look at me!\nLook at me now!" 
said the cat.\n"With a cup and a cake\nOn the top of my hat!\nI can hold up TWO books!\nI can hold up the fish!\nAnd a litte toy ship!\nAnd some milk on a dish!\nAnd look!\nI can hop up and down on the ball!\nBut that is not all!\nOh, no.\nThat is not all...\n\n"Look at me!\nLook at me!\nLook at me NOW!\nIt is fun to have fun\nBut you have to know how.\nI can hold up the cup\nAnd the milk and the cake!\nI can hold up these books!\nAnd the fish on a rake!\nI can hold the toy ship\nAnd a little toy man!\nAnd look! With my tail\nI can hold a red fan!\nI can fan with the fan\nAs I hop on the ball!\nBut that is not all.\nOh, no.\nThat is not all...."\n\nThat is what the cat said...\nThen he fell on his head!\nHe came down with a bump\nFrom up there on the ball.\nAnd Sally and I,\nWe saw ALL the things fall!\n\nAnd our fish came down, too.\nHe fell into a pot!\nHe said, "Do I like this?"\nOh, no! I do not.\nThis is not a good game,"\nSaid our fish as he lit.\n"No, I do not like it,\nNot one little bit!"\n\n"Now look what you did!"\nSaid the fish to the cat.\n"Now look at this house!\nLook at this! 
Look at that!\nYou sank our toy ship,\nSank it deep in the cake.\nYou shook up our house\nAnd you bent our new rake.\nYou SHOULD NOT be here\nWhen our mother is not.\nYou get out of this house!"\nSaid the fish in the pot.\n\n"But I like to be here.\nOh, I like it a lot!"\nSaid the Cat in the Hat\nTo the fish in the pot.\n"I will NOT go away.\nI do NOT wish to go!\nAnd so," said the Cat in the Hat,\n\n"So\nso\nso...\n\nI will show you\nAnother good game that I know!"\nAnd then he ran out.\nAnd, then, fast as a fox,\nThe Cat in the Hat\nCame back in with a box.\nA big red wood box.\nIt was shut with a hook.\n"Now look at this trick,"\nSaid the cat.\n"Take a look!"\n\nThen he got up on top\nWith a tip of his hat.\n"I call this game FUN-IN-A-BOX,"\nSaid the cat.\n"In this box are two things\nI will show to you now.\nYou will like these two things,"\nSaid the cat with a bow.\n\n"I will pick up the hook.\nYou will see something new.\nTwo things. And I call them\nThing One and Thing Two.\nThese Things will not bite you.\nThey want to have fun."\nThen, out of the box\nCame Thing Two and Thing One!\nAnd they ran to us fast.\nThey said, "How do you do?\nWould you like to shake hands\nWith Thing One and Thing Two?"\n\nAnd Sally and I\nDid not know what to do.\nSo we had to shake hands\nWith Thing One and Thing Two.\nWe shook their two hands.\nBut our fish said, "No! No!\nThose Things should not be\nIn this house! Make them go!\n"They should not be here\nWhen your mother is not!\nPut them out! Put them out!"\nSaid the fish in the pot.\n\n"Have no fear, little fish,"\nSaid the Cat in the Hat.\n"These Things are good Things."\nAnd he gave them a pat.\n"They are tame. Oh, so tame!\nThey have come here to play.\nThey will give you some fun\nOn this wet, wet, wet day."\n\n"Now, here is a game that they like,"\nSaid the cat.\n"They like to fly kites,"\nSaid the Cat in the Hat\n\n"No! Not in the house!"\nSaid the fish in the pot.\n"They should not fly kites\nIn a house! 
They should not.\nOh, the things they will bump!\nOh, the things they will hit!\nOh, I do not like it!\nNot one little bit!" Then Sally and I\nSaw them run down the hall.\nWe saw those two Things\nBump their kites on the wall!\nBump! Thump! Thump! Bump!\nDown the wall in the hall.\n\nThing Two and Thing One!\nThey ran up! They ran down!\nOn the string of one kite\nWe saw Mother\'s new gown!\nHer gown with the dots\nThat are pink, white and red.\nThen we saw one kite bump\nOn the head of her bed!\n\nThen those Things ran about\nWith big bumps, jumps and kicks\nAnd with hops and big thumps\nAnd all kinds of bad tricks.\nAnd I said,\n"I do NOT like the way that they play\nIf Mother could see this,\nOh, what would she say!"\n\nThen our fish said, "Look! Look!"\nAnd our fish shook with fear.\n"Your mother is on her way home!\nDo you hear?\nOh, what will she do to us?\nWhat will she say?\nOh, she will not like it\nTo find us this way!"\n\n"So, DO something! Fast!" said the fish.\n"Do you hear!\nI saw her. Your mother!\nYour mother is near!\nSo, as fast as you can,\nThink of something to do!\nYou will have to get rid of\nThing One and Thing Two!"\n\nSo, as fast as I could,\nI went after my net.\nAnd I said, "With my net\nI can get them I bet.\nI bet, with my net,\nI can get those Things yet!"\n\nThen I let down my net.\nIt came down with a PLOP!\nAnd I had them! At last!\nThoe two Things had to stop.\nThen I said to the cat,\n"Now you do as I say.\nYou pack up those Things\nAnd you take them away!"\n\n"Oh dear!" said the cat,\n"You did not like our game...\nOh dear.\n\nWhat a shame!\nWhat a shame!\nWhat a shame!"\n\nThen he shut up the Things\nIn the box with the hook.\nAnd the cat went away\nWith a sad kind of look.\n\n"That is good," said the fish.\n"He has gone away. 
Yes.\nBut your mother will come.\nShe will find this big mess!\nAnd this mess is so big\nAnd so deep and so tall,\nWe ca not pick it up.\nThere is no way at all!"\n\nAnd THEN!\nWho was back in the house?\nWhy, the cat!\n"Have no fear of this mess,"\nSaid the Cat in the Hat.\n"I always pick up all my playthings\nAnd so...\nI will show you another\nGood trick that I know!"\n\nThen we saw him pick up\nAll the things that were down.\nHe picked up the cake,\nAnd the rake, and the gown,\nAnd the milk, and the strings,\nAnd the books, and the dish,\nAnd the fan, and the cup,\nAnd the ship, and the fish.\nAnd he put them away.\nThen he said, "That is that."\nAnd then he was gone\nWith a tip of his hat.\n\nThen our mother came in\nAnd she said to us two,\n"Did you have any fun?\nTell me. What did you do?"\n\nAnd Sally and I did not know\nWhat to say.\nShould we tell her\nThe things that went on there that day?\n\nShould we tell her about it?\nNow, what SHOULD we do?\nWell...\nWhat would YOU do\nIf your mother asked YOU?\n', "Fox\nSocks\nBox\nKnox\n\nKnox in box.\nFox in socks.\n\nKnox on fox in socks in box.\n\nSocks on Knox and Knox in box.\n\nFox in socks on box on Knox.\n\nChicks with bricks come.\nChicks with blocks come.\nChicks with bricks and blocks and clocks come.\n\nLook, sir. Look, sir. Mr. Knox, sir.\nLet's do tricks with bricks and blocks, sir.\nLet's do tricks with chicks and clocks, sir.\n\nFirst, I'll make a quick trick brick stack.\nThen I'll make a quick trick block stack.\n\nYou can make a quick trick chick stack.\nYou can make a quick trick clock stack.\n\nAnd here's a new trick, Mr. Knox....\nSocks on chicks and chicks on fox.\nFox on clocks on bricks and blocks.\nBricks and blocks on Knox on box.\n\nNow we come to ticks and tocks, sir.\nTry to say this Mr. Knox, sir....\n\nClocks on fox tick.\nClocks on Knox tock.\nSix sick bricks tick.\nSix sick chicks tock.\n\nPlease, sir. 
I don't like this trick, sir.\nMy tongue isn't quick or slick, sir.\nI get all those ticks and clocks, sir, \nmixed up with the chicks and tocks, sir.\nI can't do it, Mr. Fox, sir.\n\nI'm so sorry, Mr. Knox, sir.\n\nHere's an easy game to play.\nHere's an easy thing to say....\n\nNew socks.\nTwo socks.\nWhose socks?\nSue's socks.\n\nWho sews whose socks?\nSue sews Sue's socks.\n\nWho sees who sew whose new socks, sir?\nYou see Sue sew Sue's new socks, sir.\n\nThat's not easy, Mr. Fox, sir.\n\nWho comes? ...\nCrow comes.\nSlow Joe Crow comes.\n\nWho sews crow's clothes?\nSue sews crow's clothes.\nSlow Joe Crow sews whose clothes?\nSue's clothes.\n\nSue sews socks of fox in socks now.\n\nSlow Joe Crow sews Knox in box now.\n\nSue sews rose on Slow Joe Crow's clothes.\nFox sews hose on Slow Joe Crow's nose.\n\nHose goes.\nRose grows.\nNose hose goes some.\nCrow's rose grows some.\n\nMr. Fox!\nI hate this game, sir.\nThis game makes my tongue quite lame, sir.\n\nMr. Knox, sir, what a shame, sir.\n\nWe'll find something new to do now.\nHere is lots of new blue goo now.\nNew goo. Blue goo.\nGooey. Gooey.\nBlue goo. New goo.\nGluey. Gluey.\n\nGooey goo for chewy chewing!\nThat's what that Goo-Goose is doing.\nDo you choose to chew goo, too, sir?\nIf, sir, you, sir, choose to chew, sir, \nwith the Goo-Goose, chew, sir.\nDo, sir.\n\nMr. Fox, sir, \nI won't do it. \nI can't say. \nI won't chew it.\n\nVery well, sir.\nStep this way.\nWe'll find another game to play.\n\nBim comes.\nBen comes.\nBim brings Ben broom.\nBen brings Bim broom.\n\nBen bends Bim's broom.\nBim bends Ben's broom.\nBim's bends.\nBen's bends.\nBen's bent broom breaks.\nBim's bent broom breaks.\n\nBen's band. Bim's band.\nBig bands. Pig bands.\n\nBim and Ben lead bands with brooms.\nBen's band bangs and Bim's band booms.\n\nPig band! Boom band!\nBig band! Broom band!\nMy poor mouth can't say that. No, sir.\nMy poor mouth is much too slow, sir.\n\nWell then... 
bring your mouth this way.\nI'll find it something it can say.\n\nLuke Luck likes lakes.\nLuke's duck likes lakes.\nLuke Luck licks lakes.\nLuck's duck licks lakes.\n\nDuck takes licks in lakes Luke Luck likes.\nLuke Luck takes licks in lakes duck likes.\n\nI can't blab such blibber blubber!\nMy tongue isn't make of rubber.\n\nMr. Knox. Now come now. Come now.\nYou don't have to be so dumb now....\n\nTry to say this, Mr. Knox, please....\n\nThrough three cheese trees three free fleas flew.\nWhile these fleas flew, freezy breeze blew.\nFreezy breeze made these three trees freeze.\nFreezy trees made these trees' cheese freeze.\nThat's what made these three free fleas sneeze.\n\nStop it! Stop it!\nThat's enough, sir.\nI can't say such silly stuff, sir.\n\nVery well, then, Mr. Knox, sir.\n\nLet's have a little talk about tweetle beetles....\n\nWhat do you know about tweetle beetles? Well...\n\nWhen tweetle beetles fight, \nit's called a tweetle beetle battle.\n\nAnd when they battle in a puddle, \nit's a tweetle beetle puddle battle.\n\nAND when tweetle beetles battle with paddles in a puddle, \nthey call it a tweetle beetle puddle paddle battle.\n\nAND...\n\nWhen beetles battle beetles in a puddle paddle battle \nand the beetle battle puddle is a puddle in a bottle...\n...they call this a tweetle beetle bottle puddle paddle battle muddle.\n\nAND...\n\nWhen beetles fight these battles in a bottle with their paddles \nand the bottle's on a poodle and the poodle's eating noodles...\n...they call this a muddle puddle tweetle poodle beetle noodle \nbottle paddle battle.\n\nAND...\n\nNow wait a minute, Mr. 
Socks Fox!\n\nWhen a fox is in the bottle where the tweetle beetles battle \nwith their paddles in a puddle on a noodle-eating poodle, \nTHIS is what they call...\n\n...a tweetle beetle noodle poodle bottled paddled \nmuddled duddled fuddled wuddled fox in socks, sir!\n\nFox in socks, our game is done, sir.\nThank you for a lot of fun, sir.\n", 'Every Who\nDown in Whoville\nLiked Christmas a lot...\n\nBut the Grinch,\nWho lived just north of Whoville,\nDid NOT!\n\nThe Grinch hated Christmas! The whole Christmas season!\nNow, please don\'t ask why. No one quite knows the reason.\nIt could be his head wasn\'t screwed on just right.\nIt could be, perhaps, that his shoes were too tight.\nBut I think that the most likely reason of all,\nMay have been that his heart was two sizes too small.\n\nWhatever the reason,\nHis heart or his shoes,\nHe stood there on Christmas Eve, hating the Whos,\nStaring down from his cave with a sour, Grinchy frown,\nAt the warm lighted windows below in their town.\nFor he knew every Who down in Whoville beneath,\nWas busy now, hanging a mistletoe wreath.\n\n"And they\'re hanging their stockings!" he snarled with a sneer,\n"Tomorrow is Christmas! It\'s practically here!"\nThen he growled, with his Grinch fingers nervously drumming,\n"I MUST find some way to stop Christmas from coming!"\nFor Tomorrow, he knew, all the Who girls and boys,\nWould wake bright and early. They\'d rush for their toys!\nAnd then! Oh, the noise! Oh, the Noise!\nNoise! Noise! Noise!\nThat\'s one thing he hated! The NOISE!\nNOISE! NOISE! NOISE!\n\nThen the Whos, young and old, would sit down to a feast.\nAnd they\'d feast! And they\'d feast! And they\'d FEAST!\nFEAST! FEAST! 
FEAST!\nThey would feast on Who-pudding, and rare Who-roast beast.\nWhich was something the Grinch couldn\'t stand in the least!\n\nAnd THEN They\'d do something He liked least of all!\nEvery Who down in Whoville, the tall and the small,\nWould stand close together, with Christmas bells ringing.\nThey\'d stand hand-in-hand. And the Whos would start singing!\nThey\'d sing! And they\'d sing! And they\'d SING!\nSING! SING! SING!\n\nAnd the more the Grinch thought of this Who-Christmas-Sing,\nThe more the Grinch thought, "I must stop this whole thing!"\n"Why, for fifty-three years I\'ve put up with it now!"\n"I MUST stop this Christmas from coming! But HOW?"\n\nThen he got an idea! An awful idea!\nTHE GRINCH GOT A WONDERFUL, AWFUL IDEA!\n\n"I know just what to do!" The Grinch laughed in his throat.\nAnd he made a quick Santy Claus hat and a coat.\nAnd he chuckled, and clucked, "What a great Grinchy trick!"\n"With this coat and this hat, I look just like Saint Nick!"\n\n"All I need is a reindeer..."\nThe Grinch looked around.\nBut, since reindeer are scarce, there was none to be found.\nDid that stop the old Grinch?\nNo! The Grinch simply said,\n"If I can\'t find a reindeer, I\'ll make one instead!"\nSo he called his dog, Max. Then he took some red thread,\nAnd he tied a big horn on the top of his head.\n\nTHEN\nHe loaded some bags\nAnd some old empty sacks,\nOn a ramshackle sleigh\nAnd he hitched up old Max.\n\nThen the Grinch said, "Giddap!"\nAnd the sleigh started down,\nToward the homes where the Whos\nLay asnooze in their town.\n\nAll their windows were dark. Quiet snow filled the air.\nAll the Whos were all dreaming sweet dreams without care.\nWhen he came to the first little house on the square.\n"This is stop number one," the old Grinchy Claus hissed,\nAnd he climbed to the roof, empty bags in his fist.\n\nThen he slid down the chimney. 
A rather tight pinch.\nBut, if Santa could do it, then so could the Grinch.\nHe got stuck only once, for a moment or two.\nThen he stuck his head out of the fireplace flue.\nWhere the little Who stockings all hung in a row.\n"These stockings," he grinned, "are the first things to go!"\n\nThen he slithered and slunk, with a smile most unpleasant,\nAround the whole room, and he took every present!\nPop guns! And bicycles! Roller skates! Drums!\nCheckerboards! Tricycles! Popcorn! And plums!\nAnd he stuffed them in bags. Then the Grinch, very nimbly,\nStuffed all the bags, one by one, up the chimney!\n\nThen he slunk to the icebox. He took the Whos\' feast!\nHe took the Who-pudding! He took the roast beast!\nHe cleaned out that icebox as quick as a flash.\nWhy, that Grinch even took their last can of Who-hash!\n\nThen he stuffed all the food up the chimney with glee.\n"And NOW!" grinned the Grinch, "I will stuff up the tree!"\n\nAnd the Grinch grabbed the tree, and he started to shove,\nWhen he heard a small sound like the coo of a dove.\nHe turned around fast, and he saw a small Who!\nLittle Cindy-Lou Who, who was not more than two.\n\nThe Grinch had been caught by this tiny Who daughter,\nWho\'d got out of bed for a cup of cold water.\nShe stared at the Grinch and said, "Santy Claus, why,\n"Why are you taking our Christmas tree? WHY?"\n\nBut, you know, that old Grinch was so smart and so slick,\nHe thought up a lie, and he thought it up quick!\n"Why, my sweet little tot," the fake Santy Claus lied,\n"There\'s a light on this tree that won\'t light on one side."\n"So I\'m taking it home to my workshop, my dear."\n"I\'ll fix it up there. Then I\'ll bring it back here."\n\nAnd his fib fooled the child. 
Then he patted her head,\nAnd he got her a drink and he sent her to bed.\nAnd when Cindy-Lou Who went to bed with her cup,\nHE went to the chimney and stuffed the tree up!\n\nThen the last thing he took\nWas the log for their fire!\nThen he went up the chimney, himself, the old liar.\nOn their walls he left nothing but hooks and some wire.\n\nAnd the one speck of food\nThat he left in the house,\nWas a crumb that was even too small for a mouse.\n\nThen\nHe did the same thing To the other Whos\' houses\n\nLeaving crumbs\nMuch too small\nFor the other Whos\' mouses!\n\nIt was quarter past dawn...\nAll the Whos, still a-bed,\nAll the Whos, still asnooze\nWhen he packed up his sled,\nPacked it up with their presents! The ribbons! The wrappings!\nThe tags! And the tinsel! The trimmings! The trappings!\n\nThree thousand feet up! Up the side of Mt. Crumpit,\nHe rode with his load to the tiptop to dump it!\n"Pooh-pooh to the Whos!" he was grinchishly humming.\n"They\'re finding out now that no Christmas is coming!"\n"They\'re just waking up! I know just what they\'ll do!"\n"Their mouths will hang open a minute or two,\nThen the Whos down in Whoville will all cry Boo-Hoo!"\n\n"That\'s a noise," grinned the Grinch,\n"That I simply MUST hear!"\nSo he paused. And the Grinch put his hand to his ear.\nAnd he did hear a sound rising over the snow.\nIt started in low. Then it started to grow.\n\nBut the sound wasn\'t sad! Why, this sound sounded merry!\nIt couldn\'t be so! But it WAS merry! VERY!\nHe stared down at Whoville! The Grinch popped his eyes!\nThen he shook! What he saw was a shocking surprise!\n\nEvery Who down in Whoville, the tall and the small,\nWas singing! Without any presents at all!\nHe HADN\'T stopped Christmas from coming!\nIT CAME!\nSomehow or other, it came just the same!\n\nAnd the Grinch, with his grinch-feet ice-cold in the snow,\nStood puzzling and puzzling: "How could it be so?"\n"It came with out ribbons! 
It came without tags!"\n"It came without packages, boxes or bags!"\nAnd he puzzled three hours, till his puzzler was sore.\nThen the Grinch thought of something he hadn\'t before!\n"Maybe Christmas," he thought, "doesn\'t come from a store."\n"Maybe Christmas...perhaps...means a little bit more!"\n\nAnd what happened then?\nWell...in Whoville they say,\nThat the Grinch\'s small heart\nGrew three sizes that day!\nAnd the minute his heart didn\'t feel quite so tight,\nHe whizzed with his load through the bright morning light,\nAnd he brought back the toys! And the food for the feast!\nAnd he, HE HIMSELF! The Grinch carved the roast beast!\n', 'UP PUP Pup is up.\nCUP PUP Pup in cup.\nPUP CUP Cup on pup.\nMOUSE HOUSE Mouse on house.\nHOUSE MOUSE House on mouse.\nALL TALL We all are tall.\nALL SMALL We all are small.\nALL BALL We all play ball.\nBALL WALL Up on a wall.\nALL FALL Fall off the wall.\nDAY PLAY We play all day.\nNIGHT FIGHT We fight all night.HE ME He is after me.\nHIM JIM Jim is after him.\nSEE BEE We see a bee.\nSEE BEE THREE Now we see three.\nTHREE TREE Three fish in a tree.\nFish in a tree? How can that be?\nRED RED They call me Red.\nRED BED I am in bed.\nRED NED TED and ED in BED\nPAT PAT they call him Pat.\nPAT SAT Pat sat on hat.\nPAT CAT Pat sat on cat.\nPAT BAT Pat sat on bat.\nNO PAT NO Don’t sit on that.\nSAD DAD BAD HAD Dad is sad.\nVery, very sad.\nHe had a bad day. What a day Dad had!\nTHING THING What is that thing?\nTHING SING That thing can sing!\nSONG LONG A long, long song.\nGood-by, Thing. You sing too long.\nWALK WALK We like to walk.\nWALK TALK We like to talk.\nHOP POP We like to hop.\nWe like to hop on top of Pop.\nSTOP You must not hop on Pop.\nMr. BROWN Mrs. BROWN\nMr. Brown upside down.\nPup up. Brown down.\nPup is down. Where is Brown?\nWHERE IS BROWN? THERE IS BROWN!\nMr. Brown is out of town.\nBACK BLACK Brown came back.\nBrown came back with Mr. 
Black.\nSNACK SNACK Eat a snack.\nEat a snack with Brown and Black.\nJUMP BUMP He jumped. He bumped.\nFAST PAST He went past fast.\nWENT TENT SENT He went into the tent.\nI sent him out of the tent.\nWET GET Two dogs get wet.\nHELP YELP They yelp for help.\nHILL WILL Will went up hill.\nWILL HILL STILL Will is up hill still.\nFATHER MOTHER SISTER BROTHER\nThat one is my other brother.\nMy brothers read a little bit.\nLittle words like If and it.\nMy father can read big words, too.\nLike CONSTANTINOPLE and TIMBUKTU\nSAY SAY What does this say?\nseehemewe\npatpuppop\nhethreetreebee\ntophopstop\nAsk me tomorrow but not today.\n', 'On the fifteenth of May, in the jungle of Nool,\nIn the heat of the day, in the cool of the pool,\nHe was splashing...enjoying the jungle\'s great joys...\nWhen Horton the elephant heard a small noise.\n\nSo Horton stopped splashing. He looked towards the sound.\n"That\'s funny," thought Horton. "There\'s no one around."\nThen he heard it again! Just a very faint yelp\nAs if some tiny person were calling for help.\n"I\'ll help you," said Horton. "But who are you? Where?"\nHe looked and he looked. He could see nothing there\nBut a small speck of dust blowing past though the air.\n\n"I say!" murmured Horton. "I\'ve never heard tell\nOf a small speck of dust that is able to yell.\nSo you know what I think?...Why, I think that there must\nBe someone on top of that small speck of dust!\nSome sort of a creature of very small size,\ntoo small to be seen by an elephant\'s eyes...\n\n"...some poor little person who\'s shaking with fear\nThat he\'ll blow in the pool! He has no way to steer!\nI\'ll just have to save him. Because, after all,\nA person\'s a person, no matter how small."\n\nSo, gently, and using the greatest of care,\nThe elephant stretched his great trunk through the air,\nAnd he lifted the dust speck and carried it over\nAnd placed it down, safe, on a very soft clover.\n\n"Humpf!" humpfed a voice. 
Twas a sour Kangaroo.\nAnd the young kangaroo in he pouch said "Humpf!" too\n"Why, that speck is as small as the head of a pin.\nA person on that?...why, there never has been!"\n\n"Believe me," said Horton. "I tell you sincerely,\nMy ears are quite keen and I heard him quite clearly.\nI know there\'s a person down there. And, what\'s more,\nQuite likely there\'s two. Even three. Even four.\nQuite likely...\n\n"...a family, for all that we know!\nA family with children just starting to grow.\nSo, please," Horton said, "as a favour to me,\nTry not to disturb them. Just let them be."\n\n"I think you\'re a fool!" laughed the sour kangaroo\nAnd the young kangaroo in her pouch said, "Me, too!\nYou\'re the biggest blame fool in the jungle of Nool!"\nAnd the kangaroos plunged in the cool of the pool.\n"What terrible splashing!" the elephant frowned.\n"I can\'t let my very small persons get drowned!\nI\'ve got to protect them. I\'m bigger than they."\nSo he plucked up the clover and hustled away.\n\nThrough the high jungle tree tops, the news quickly spread:\n"He talks to a dust speck! He\'s out of his head!\nJust look at him walk with that speck on the flower!"\nAnd Horton walked, worrying, almost an hour.\n"Should I put this speck down?..." Horton though with alarm.\n"If I do, these small persons may come to great harm.\nI can\'t put it down. And I won\'t! After all\nA person\'s a person. No matter how small."\n\nThen Horton stopped walking.\nThe speck-voice was talking!\nThe voice was so faint he could just barely hear it.\n"Speak up, please," Said Horton. He put his ear near it.\n"My friend," came the voice, "you\'re a very fine friend.\nYou\'ve helped all us folks on this dust speck no end.\nYou\'ve saved all our houses, our ceilings and floors.\nYou\'ve saved all our churches and grocery stores."\n\n"You mean..." Horton gasped, "you have buildings there, too?"\n"Oh, yes," piped the voice. 
"We most certainly do...\n"I know," called the voice, "I\'m too small to be seen\nBut I\'m Mayor of a town that is friendly and clean.\nOur buildings, to you, would seem terribly small\nBut to us, who aren\'t big, they are wonderfully tall.\nMy town is called Who-ville, for I am a Who\nAnd we Whos are all thankful and greatful to you"\n\nAnd Horton called back to the Mayor of the town,\n"You\'re safe now. Don\'t worry. I won\'t let you down."\n\nBut, Just as he spoke to the Mayor of the speck,\nThree big jungle monkeys climbed up Horton\'s neck!\nThe Wickersham Brothers came shouting, "What rot!\nThis elephants talking to Whos who are not!\nThere aren\'t any Whos! And they don\'t have a Mayor!\nAnd we\'re going to stop all this nonsense! So there!"\n\nThey snatched Horton\'s clover! They carried it off\nTo a black-bottomed eagle named Valad Vlad-I-koff,\nA mighty strong eagle, of very swift wing,\nAnd they said, "Will you kindly get rid of this thing?"\nAnd, before the poor elephant could even speak,\nThat eagle flew off with the flower in his beak.\n\nAll that late afternoon and far into the night\nThat black-bottomed bird flapped his wings in fast flight,\nWhile Horton chased after, with groans, over stones\nThat tattered his toenails and battered his bones,\nAnd begged, "Please don\'t harm all my little folks, who\nHave as much right to live as us bigger folk do!"\n\nBut far, far beyond him, that eagle kept flapping\nAnd over his shoulder called back, "Quit your yapping.\nI\'ll fly the night through. I\'m a bird. I don\'t mind it.\nAnd I\'ll hide this, tomorrow, where you\'ll never find it!"\n\nAnd at 6:56 the next morning he did it.\nIt sure was a terrible place that he hid it.\nHe let that small clover drop somewhere inside\nOf a great patch of clovers a hundred miles wide!\n"Find THAT!" sneered the bird. "But I think you will fail."\nAnd he left\nWith a flip\nOf his black-bottomed tail.\n\n"I\'ll find it!" cried Horton. 
"I\'ll find it or bust!\nI SHALL find my friends on my small speck of dust!"\nAnd clover, by clover, by clover with care\nHe picked up and searched the, and called, "Are you there?"\nBut clover, by clover, by clover he found\nThat the one that he sought for was just not around.\nAnd by noon poor old Horton, more dead than alive,\nHad picked, searched, and piled up, nine thousand and five.\n\nThen, on through the afternoon, hour after hour...\nTill he found them at last! On the three millionth flower!\n"My friends!" cried the elephant. "Tell me! Do tell!\nAre you safe? Are you sound? Are you whole? Are you well?"\n\nFrom down on the speck came the voice of the Mayor:\n"We\'ve really had trouble! Much more than our share.\nWhen that black-bottomed birdie let go and we dropped,\nWe landed so hard that our clocks have all stopped.\nOur tea pots are broken. Our rocking-chairs are smashed.\nAnd our bicycle tires all blew up when we crashed.\nSo, Horton, Please!" pleaded that voice of the Mayor\'s,\n"Will you stick by us Whos while we\'re making repairs?"\n\n"Of course," Horton answered. "Of course I will stick.\nI\'ll stick by you small folks though thin and though thick!"\n\n"Humpf!" humpfed a voice!\n"For almost two days you\'ve run wild and insisted\nOn chatting with persons who\'ve never existed.\nSuch carryings-on in our peaceable jungle!\nWe\'ve had quite enough of your bellowing bungle!\nAnd I\'m here to state," snapped the big kangaroo,\n"That your silly nonsensical game is all through!"\nAnd the young kangaroo in her pouch said, "Me, too!"\n\n"With the help of the Wickersham Brothers and dozens\nOf Wickersham Uncles and Wickershams Cousins\nAnd Wickersham In-Laws, whose help I\'ve engaged,\nYou\'re going to be roped! And you\'re going to be caged!\nAnd, as for your dust speck...hah!\nThat we shall boil\nIn a hot steaming kettle of Beezle-Nut Oil!"\n"Boil it?..." 
gasped Horton!\n"Oh, that you can\'t do!\nIt\'s all full of persons!\nThey\'ll prove it to you!"\n\n"Mr. Mayor! Mr. Mayor!" Horton called. "Mr. Mayor!\nYou\'ve got to prove that you really are there!\nSo call a big meeting. Get everyone out.\nMake every Who holler! Make every Who shout!\nMake every Who scream! If you don\'t, every Who\nIs going to end up in a Beezle-Nut stew!"\n\nAnd, down on the dust speck, the scared little Mayor\nQuick called a big meeting in Who-ville Town Square.\nAnd his people cried loudly. They cried out in fear:\n"We are here! We are here! We are here!"\n\nThe elephant smiled: "That was clear as a bell.\nYou Kangaroos surely heard that very well."\n"All I heard," snapped the big kangaroo, "Was the breeze,\nAnd the faint sound of wind through the far-distant trees.\nI heard no small voices. And you didn\'t either."\nAnd the you kangaroo in her pouch said, "Me, neither."\n\n"Grab him!" they shouted. "And cage the big dope!\nLasso his stomach with ten miles of rope!\nTie the knots tight so he\'ll never shake lose!\nThen dunk that dumb speck in the Beezle-Nut juice!"\n\n\n\nHorton fought back with great vigor and vim\nBut the Wickersham gang was too many for him.\nThey beat him! They mauled him! They started to haul\nHim into his cage! But he managed to call\nTo the Mayor: "Don\'t give up! I believe in you all\nA person\'s a person, no matter how small!\nAnd you very small persons will not have to die\nIf you make yourselves heard! So come on, now, and TRY!"\n\nThe Mayor grabbed a tom-tom. He started to smack it.\nAnd, all over Who-ville, they whooped up a racked.\nThey rattled tie kettles! They beat on brass pans,\nOn garbage pail tops and old cranberry cans!\nThey blew on bazooka and blasted great toots\nOn clarinets, oom-pahs and boom-pahs and flutes!\n\nGreat gusts of loud racket rang high through the air.\nThey rattled and shook the whole sky! And the Mayor\nCalled up through the howling mad hullabaloo:\n"Hey Horton! Hows this? 
Is our sound coming through?"\n\nAnd Horton called back, "I can hear you just fine.\nBut the kangaroos\' ears aren\'t as strong, quite, as mine.\nThey don\'t hear a thing! Are you sure all you boys\nAre doing their best? Are they ALL making noise?\nAre you sure every Who down in Who-ville is working?\nQuick! Look through your town! Is there anyone shirking?"\n\nThrough the town rushed the Mayor, From the east to the west.\nBut everyone seemed to be doing his best.\nEveryone seemed to be yapping or yipping!\nEveryone seemed to be beeping or bipping!\nBut it wasn\'t enough, all this ruckus and roar!\nHe HAD to find someone to help him make more.\nHe raced through each building! He searched floor-to-floor!\n\nAnd, just as he felt he was getting nowhere,\nAnd almost about to give up in despair,\nHe suddenly burst through a door and that Mayor\nDiscovered one shirker! Quite hidden away\nIn the Fairfax Apartments (Apartment 12-J)\nA very small, very small shirker named Jo-Jo\nwas standing, just standing, and bouncing a Yo-Yo!\nNot making a sound! Not a yipp! Not a chirp!\nAnd the Mayor rushed inside and he grabbed the young twerp!\n\nAnd he climbed with the lad up the Eiffelberg Tower.\n"This," cried the Mayor, "is your towns darkest hour!\nThe time for all Whos who have blood that is red\nTo come to the aid of their country!" he said.\n"We\'ve GOT to make noises in greater amounts!\nSo, open your mouth, lad! For every voice counts!"\n\nThus he spoke as he climbed. When they got to the top,\nThe lad cleared his throat and he shouted out, "YOPP!"\n\nAnd that Yopp...\nThat one small, extra Yopp put it over!\nFinally, at last! From that speck on that clover\nTheir voices were heard! They rang out clear and clean.\nAnd the elephant smiled. "Do you see what I mean?...\nThey\'ve proved they ARE persons, no matter how small.\nAnd their whole world was saved by the smallest of All!"\n\n"How true! 
Yes, how true," said the big kangaroo.\n"And, from now on, you know what I\'m planning to do?...\nFrom now on, I\'m going to protect them with you!"\nAnd the young kangaroo in her pouch said...\n"...ME, TOO!"\n"From the sun in the summer. From rain when it\'s fall-ish,\nI\'m going to protect them. No matter how small-ish!"\n', 'Congratulations!\nToday is your day.\nYou\'re off to Great Places!\nYou\'re off and away!\n\nYou have brains in your head.\nYou have feet in your shoes\nYou can steer yourself\nAny direction you choose.\nYou\'re on your own. And you know what you know.\nAnd YOU are the guy who\'ll decide where to go.\n\nYou\'ll look up and down streets. Look \'em over with care.\nAbout some you will say, "I don\'t choose to go there."\nWith your head full of brains and your shoes full of feet,\nYou\'re too smart to go down any not-so-good street.\n\nAnd you may not find any\nYou\'ll want to go down.\nIn that case, of course,\nYou\'ll head straight out of town.\n\nIt\'s opener there\nIn the wide open air.\n\nOut there things can happen\nAnd frequently do\nTo people as brainy\nAnd footsy as you.\n\nAnd when things start to happen,\nDon\'t worry. 
Don\'t stew.\nJust go right along.\nYou\'ll start happening too.\n\nOH!\nTHE PLACES YOU\'LL GO!\n\nYou\'ll be on your way up!\nYou\'ll be seeing great sights!\nYou\'ll join the high fliers\nWho soar to high heights.\n\nYou won\'t lag behind, because you\'ll have the speed.\nYou\'ll pass the whole gang and you\'ll soon take the lead.\nWherever you fly, you\'ll be the best of the best.\nWherever you go, you will top all the rest.\n\nExcept when you don\' t\nBecause, sometimes, you won\'t.\n\nI\'m sorry to say so\nbut, sadly, it\'s true\nthat Bang-ups\nand Hang-ups\ncan happen to you.\n\nYou can get all hung up\nin a prickle-ly perch.\nAnd your gang will fly on.\nYou\'ll be left in a Lurch.\n\nYou\'ll come down from the Lurch\nwith an unpleasant bump.\nAnd the chances are, then,\nthat you\'ll be in a Slump.\n\nAnd when you\'re in a Slump,\nyou\'re not in for much fun.\nUn-slumping yourself\nis not easily done.\n\nYou will come to a place where the streets are not marked.\nSome windows are lighted. But mostly they\'re darked.\nA place you could sprain both your elbow and chin!\nDo you dare to stay out? Do you dare to go in?\nHow much can you lose? How much can you win?\n\nAnd IF you go in, should you turn left or right...\nor right-and-three-quarters? 
Or, maybe, not quite?\nOr go around back and sneak in from behind?\nSimple it\'s not, I\'m afraid you will find,\nfor a mind-maker-upper to make up his mind.\n\nYou can get so confused\nthat you\'ll start in to race\ndown long wiggled roads at a break-necking pace\nand grind on for miles cross weirdish wild space,\nheaded, I fear, toward a most useless place.\nThe Waiting Place...\n\n...for people just waiting.\nWaiting for a train to go\nor a bus to come, or a plane to go\nor the mail to come, or the rain to go\nor the phone to ring, or the snow to snow\nor the waiting around for a Yes or No\nor waiting for their hair to grow.\nEveryone is just waiting.\n\nWaiting for the fish to bite\nor waiting for the wind to fly a kite\nor waiting around for Friday night\nor waiting, perhaps, for their Uncle Jake\nor a pot to boil, or a Better Break\nor a string of pearls, or a pair of pants\nor a wig with curls, or Another Chance.\nEveryone is just waiting.\n\nNO!\nThat\'s not for you!\n\nSomehow you\'ll escape\nall that waiting and staying\nYou\'ll find the bright places\nwhere Boom Bands are playing.\n\nWith banner flip-flapping,\nonce more you\'ll ride high!\nReady for anything under the sky.\nReady because you\'re that kind of a guy!\n\nOh, the places you\'ll go! There is fun to be done!\nThere are points to be scored. There are games to be won.\nAnd the magical things you can do with that ball\nwill make you the winning-est winner of all.\nFame! 
You\'ll be as famous as famous can be,\nwith the whole wide world watching you win on TV.\n\nExcept when they don\'t\nBecause, sometimes they won\'t.\n\nI\'m afraid that some times\nyou\'ll play lonely games too.\nGames you can\'t win\n\'cause you\'ll play against you.\n\nAll Alone!\nWhether you like it or not,\nAlone will be something\nyou\'ll be quite a lot.\n\nAnd when you\'re alone, there\'s a very good chance\nyou\'ll meet things that scare you right out of your pants.\nThere are some, down the road between hither and yon,\nthat can scare you so much you won\'t want to go on.\n\nBut on you will go\nthough the weather be foul.\nOn you will go\nthough your enemies prowl.\nOn you will go\nthough the Hakken-Kraks howl.\nOnward up many\na frightening creek,\nthough your arms may get sore\nand your sneakers may leak.\n\nOn and on you will hike,\nAnd I know you\'ll hike far\nand face up to your problems\nwhatever they are.\n\nYou\'ll get mixed up, of course,\nAs you already know.\nYou\'ll get mixed up\nWith many strange birds as you go.\nSo be sure when you step.\nStep with care and great tact\nAnd remember that Life\'s\nA Great Balancing Act.\nJust never forget to be dexterous and deft.\nAnd never mix up your right foot with your left.\n\nAnd will you succeed?\nYes! You will, indeed!\n(98 and 3/4 percent guaranteed.)\n\nKID, YOU\'LL MOVE MOUNTAINS!\n\nSo...\nBe your name Buxbaum or Bixby or Bray\nOr Mordecai Ali Van Allen O\'Shea,\nYou\'re off to Great Places!\nToday is your day!\nYour mountain is waiting.\nSo...get on your way!\n', 'One fish, two fish, red fish, blue fish,\nBlack fish, blue fish, old fish, new fish.\nThis one has a little car.\nThis one has a little star.\nSay! What a lot of fish there are.\nYes. Some are red, and some are blue.\nSome are old. And some are new.\nSome are sad. And some are glad.\nAnd some are very, very bad.\nWhy are they sad and glad and bad?\nI do not know, go ask your dad.\nSome are thin. 
And some are fat.\nThe fat one has a yellow hat.\nFrom there to here,\nfrom here to there,\nfunny things are everywhere.\n\nHere are some who like to run.\nThey run for fun in the hot, hot sun.\nOh me! Oh my! Oh me! oh my!\nWhat a lot of funny things go by.\nSome have two feet and some have four.\nSome have six feet and some have more.\nWhere do they come from? I can\'t say.\nBut I bet they have come a long, long way.\nWe see them come, we see them go.\nSome are fast. And some are slow.\nSome are high. And some are low.\nNot one of them is like another.\nDon\'t ask us why, go ask your mother.\n\nSay! Look at his fingers!\nOne, two, three...\nHow many fingers do I see?\nOne, two, three, four,\nfive, six, seven, eight, nine, ten.\nHe has eleven!\nEleven! This is something new.\nI wish I had eleven too!\n\nBump! Bump! Bump!\nDid you ever ride a Wump?\nWe have a Wump with just one hump.\nBut we know a man called Mr. Gump.\nMr. Gump has a seven hump Wump. So...\nIf you like to go Bump! Bump!\nJust jump on the hump of the Wump of Gump.\n\nWho am I? My name is Ned\nI do not like my little bed.\nThis is no good. This is not right.\nMy feet stick out of bed all night.\nAnd when I pull them in,\nOh, dear!\nMy head sticks out of bed up here!\n\nWe like our bike. It is made for three.\nOur Mike sits up in back, you see.\nWe like our Mike, and this is why:\nMike does all the work when the hills get high.\n\nHello there, Ned. How do you do?\nTell me, tell me what is new?\nHow are things in your little bed?\nWhat is new? Please tell me Ned.\n\nI do not like this bed at all.\na lot of things have come to call.\nA cow, a dog, a cat, a mouse.\nOh! what a bed! Oh! what a house!\n\nOh, dear! Oh, dear! I cannot hear.\nWill you please come over near?\nWill you please look in my ear?\nThere must be something there, I fear.\nSay look! A bird was in your ear.\nBut he is out. So have no fear.\nAgain your ear can hear, my dear.\n\nMy hat is old. 
My teeth are gold.\nI have a bird I like to hold.\nMy shoe is off. My foot is cold.\nMy shoe is off. My foot is cold.\nI have a bird I like to hold.\nMy hat is old. My teeth are gold.\nAnd now my story is all told.\n\nWe took a look. We saw a Nook.\nOn his head he had a hook.\nOn his hook he had a book.\nOn his book was "How to Cook."\nWe saw him sit and try to cook.\nHe took a look at the book on the hook.\nBut a Nook can\'t read, so a Nook can\'t cook.\nSO...\nWhat good to a Nook is a hook cook book?\n\nThe moon was out and we saw some sheep.\nWe saw some sheep take a walk in their sleep.\nBy the light of the moon, by the light of a star,\nthey walked all night from near to far.\nI would never walk. I would take a car.\n\nI do not like this one so well.\nAll he does is yell, yell, yell.\nI will not have this one about.\nWhen he comes in I put him out.\nThis one is quiet as a mouse.\nI like to have him in the house.\n\nAt our house we open cans.\nWe have to open many cans.\nand that is why we have a Zans.\nA Zans for cans is very good.\nHave you a Zans for cans? You should.\n\nI like to box. How I like to box.\nSo, every day, I box a Gox.\nIn yellow socks I box my Gox.\nI box in yellow Gox box socks.\n\nIt is fun to sing if you sing with a Ying.\nMy Ying can sing like anything.\nI sing high and my Ying sings low.\nAnd we are not too bad, you know.\n\nThis one, I think, is called a Yink.\nHe likes to wink, he likes to drink.\nHe likes to drink, and drink, and drink.\nThe thing he likes to drink is ink.\nThe ink he likes to drink is pink.\nHe likes to wink and drink pink ink.\nSO...\nIf you have a lot of ink,\nthen you should get a Yink, I think.\n\nHop! Hop! Hop! I am a Yop\nAll I like to do is hop,\nFrom finger top to finger top.\nI hop from left to right and then...\nHop! Hop! I hop right back again.\nI like to hop all day and night.\nFrom right to left and left to right.\nWhy do I like to hop, hop, hop?\nI do not know. Go ask your Pop.\n\nBrush! Brush! Brush! 
Brush!\nComb! Comb! Comb! Comb!\nBlue hair is fun to brush and comb.\nAll girls who like to brush and comb,\nShould have a pet like this at home.\n\nWho is this pet? Say! He is wet.\nYou never yet met a pet, I bet,\nas wet as they let this wet pet get.\n\nDid you ever fly a kite in bed?\nDid you ever walk with ten cats on your head?\nDid you ever milk this kind of cow?\nWell, we can do it. We know how.\nIf you never did, you should.\nThese things are fun, and fun is good.\n\nHello! Hello! Are you there?\nHello! I called you up to say hello.\nI said hello.\nCan you hear me, Joe?\nOh no. I cannot hear your call.\nI cannot hear your call at all.\nThis is not good and I know why.\nA mouse has cut the wire. Good-by!\n\nFrom near to far, from here to there,\nFunny things are everywhere.\nThese yellow pets are called the Zeds.\nThey have one hair upon their heads.\nTheir hair grows fast. So fast they say,\nThey need a haircut every day.\n\nWho am I? My name is Ish\nOn my hand I have a dish.\nI have this dish to help me wish.\nWhen I wish to make a wish\nI wave my hand with a big swish swish.\nThen I say, "I wish for fish!"\nAnd I get fish right on my dish.\nSo...\nIf you wish to make a wish,\nyou may swish for fish with my Ish wish dish.\n\nAt our house we play out back.\nWe play a game called Ring the Gack.\nWould you like to play this game?\nCome down! We have the only Gack in town.\n\nLook what we found in the park in the dark.\nWe will take him home, we will call him Clark.\nHe will live at our house, he will grow and grow.\nWill our mother like this? We don\'t know.\n\nAnd now good night.\nIt is time to sleep\nSo we will sleep with our pet Zeep.\nToday is gone. Today was fun.\nTomorrow is another one.\nEvery day, from here to there.\nfunny things are everywhere.\n'])

Bag-of-Words Representation

In the bag-of-words representation, each row corresponds to a document and each column corresponds to a word. Each value is the number of times that word appears in that document.

First, we need to split each document into individual words.
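A minimal sketch of the splitting step follows. The variable `text` is a hypothetical short string written in the style of the opening of Hop on Pop, not read from the corpus files. Calling `str.split()` with no arguments breaks a string on any run of whitespace, including newlines. As a result, punctuation stays attached to words (`'up.'`) and case is preserved (`'PUP'` and `'Pup'` stay distinct), just as in the list below.

```python
# Hypothetical sample text; not loaded from the Dr. Seuss files
text = "UP PUP Pup is up.\nCUP PUP Pup in cup."

# split() with no arguments splits on spaces, newlines, and tabs alike
words = text.split()
print(words)
# ['UP', 'PUP', 'Pup', 'is', 'up.', 'CUP', 'PUP', 'Pup', 'in', 'cup.']
```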

['UP', 'PUP', 'Pup', 'is', 'up.', 'CUP', 'PUP', 'Pup', 'in', 'cup.', 'PUP', 'CUP', 'Cup', 'on', 'pup.', 'MOUSE', 'HOUSE', 'Mouse', 'on', 'house.', 'HOUSE', 'MOUSE', 'House', 'on', 'mouse.', 'ALL', 'TALL', 'We', 'all', 'are', 'tall.', 'ALL', 'SMALL', 'We', 'all', 'are', 'small.', 'ALL', 'BALL', 'We', 'all', 'play', 'ball.', 'BALL', 'WALL', 'Up', 'on', 'a', 'wall.', 'ALL', 'FALL', 'Fall', 'off', 'the', 'wall.', 'DAY', 'PLAY', 'We', 'play', 'all', 'day.', 'NIGHT', 'FIGHT', 'We', 'fight', 'all', 'night.HE', 'ME', 'He', 'is', 'after', 'me.', 'HIM', 'JIM', 'Jim', 'is', 'after', 'him.', 'SEE', 'BEE', 'We', 'see', 'a', 'bee.', 'SEE', 'BEE', 'THREE', 'Now', 'we', 'see', 'three.', 'THREE', 'TREE', 'Three', 'fish', 'in', 'a', 'tree.', 'Fish', 'in', 'a', 'tree?', 'How', 'can', 'that', 'be?', 'RED', 'RED', 'They', 'call', 'me', 'Red.', 'RED', 'BED', 'I', 'am', 'in', 'bed.', 'RED', 'NED', 'TED', 'and', 'ED', 'in', 'BED', 'PAT', 'PAT', 'they', 'call', 'him', 'Pat.', 'PAT', 'SAT', 'Pat', 'sat', 'on', 'hat.', 'PAT', 'CAT', 'Pat', 'sat', 'on', 'cat.', 'PAT', 'BAT', 'Pat', 'sat', 'on', 'bat.', 'NO', 'PAT', 'NO', 'Don’t', 'sit', 'on', 'that.', 'SAD', 'DAD', 'BAD', 'HAD', 'Dad', 'is', 'sad.', 'Very,', 'very', 'sad.', 'He', 'had', 'a', 'bad', 'day.', 'What', 'a', 'day', 'Dad', 'had!', 'THING', 'THING', 'What', 'is', 'that', 'thing?', 'THING', 'SING', 'That', 'thing', 'can', 'sing!', 'SONG', 'LONG', 'A', 'long,', 'long', 'song.', 'Good-by,', 'Thing.', 'You', 'sing', 'too', 'long.', 'WALK', 'WALK', 'We', 'like', 'to', 'walk.', 'WALK', 'TALK', 'We', 'like', 'to', 'talk.', 'HOP', 'POP', 'We', 'like', 'to', 'hop.', 'We', 'like', 'to', 'hop', 'on', 'top', 'of', 'Pop.', 'STOP', 'You', 'must', 'not', 'hop', 'on', 'Pop.', 'Mr.', 'BROWN', 'Mrs.', 'BROWN', 'Mr.', 'Brown', 'upside', 'down.', 'Pup', 'up.', 'Brown', 'down.', 'Pup', 'is', 'down.', 'Where', 'is', 'Brown?', 'WHERE', 'IS', 'BROWN?', 'THERE', 'IS', 'BROWN!', 'Mr.', 'Brown', 'is', 'out', 'of', 'town.', 'BACK', 'BLACK', 'Brown', 'came', 
'back.', 'Brown', 'came', 'back', 'with', 'Mr.', 'Black.', 'SNACK', 'SNACK', 'Eat', 'a', 'snack.', 'Eat', 'a', 'snack', 'with', 'Brown', 'and', 'Black.', 'JUMP', 'BUMP', 'He', 'jumped.', 'He', 'bumped.', 'FAST', 'PAST', 'He', 'went', 'past', 'fast.', 'WENT', 'TENT', 'SENT', 'He', 'went', 'into', 'the', 'tent.', 'I', 'sent', 'him', 'out', 'of', 'the', 'tent.', 'WET', 'GET', 'Two', 'dogs', 'get', 'wet.', 'HELP', 'YELP', 'They', 'yelp', 'for', 'help.', 'HILL', 'WILL', 'Will', 'went', 'up', 'hill.', 'WILL', 'HILL', 'STILL', 'Will', 'is', 'up', 'hill', 'still.', 'FATHER', 'MOTHER', 'SISTER', 'BROTHER', 'That', 'one', 'is', 'my', 'other', 'brother.', 'My', 'brothers', 'read', 'a', 'little', 'bit.', 'Little', 'words', 'like', 'If', 'and', 'it.', 'My', 'father', 'can', 'read', 'big', 'words,', 'too.', 'Like', 'CONSTANTINOPLE', 'and', 'TIMBUKTU', 'SAY', 'SAY', 'What', 'does', 'this', 'say?', 'seehemewe', 'patpuppop', 'hethreetreebee', 'tophopstop', 'Ask', 'me', 'tomorrow', 'but', 'not', 'today.']

Then Count the Words
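One way to count is with `collections.Counter` from the standard library, which maps each distinct item to the number of times it occurs. The sketch below applies it to a hypothetical ten-word list. Because nothing has been lowercased, `'PUP'` and `'Pup'` are counted as two different words.

```python
from collections import Counter

# Hypothetical word list (the result of splitting a short sample string)
words = ['UP', 'PUP', 'Pup', 'is', 'up.', 'CUP', 'PUP', 'Pup', 'in', 'cup.']

counts = Counter(words)
print(counts)

# most_common(n) returns the n most frequent (word, count) pairs;
# ties keep the order in which the words were first seen
print(counts.most_common(2))
# [('PUP', 2), ('Pup', 2)]
```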

Counter({'is': 10, 'on': 10, 'We': 10, 'a': 9, 'He': 6, 'PAT': 6, 'Brown': 6, 'in': 5, 'all': 5, 'like': 5, 'Pup': 4, 'ALL': 4, 'RED': 4, 'and': 4, 'to': 4, 'Mr.': 4, 'PUP': 3, 'the': 3, 'can': 3, 'Pat': 3, 'sat': 3, 'What': 3, 'THING': 3, 'WALK': 3, 'of': 3, 'down.': 3, 'went': 3, 'up.': 2, 'CUP': 2, 'MOUSE': 2, 'HOUSE': 2, 'are': 2, 'BALL': 2, 'play': 2, 'wall.': 2, 'day.': 2, 'after': 2, 'SEE': 2, 'BEE': 2, 'see': 2, 'THREE': 2, 'that': 2, 'They': 2, 'call': 2, 'me': 2, 'BED': 2, 'I': 2, 'him': 2, 'NO': 2, 'Dad': 2, 'sad.': 2, 'That': 2, 'You': 2, 'hop': 2, 'Pop.': 2, 'not': 2, 'BROWN': 2, 'IS': 2, 'out': 2, 'came': 2, 'with': 2, 'Black.': 2, 'SNACK': 2, 'Eat': 2, 'tent.': 2, 'HILL': 2, 'WILL': 2, 'Will': 2, 'up': 2, 'My': 2, 'read': 2, 'SAY': 2, 'UP': 1, 'cup.': 1, 'Cup': 1, 'pup.': 1, 'Mouse': 1, 'house.': 1, 'House': 1, 'mouse.': 1, 'TALL': 1, 'tall.': 1, 'SMALL': 1, 'small.': 1, 'ball.': 1, 'WALL': 1, 'Up': 1, 'FALL': 1, 'Fall': 1, 'off': 1, 'DAY': 1, 'PLAY': 1, 'NIGHT': 1, 'FIGHT': 1, 'fight': 1, 'night.HE': 1, 'ME': 1, 'me.': 1, 'HIM': 1, 'JIM': 1, 'Jim': 1, 'him.': 1, 'bee.': 1, 'Now': 1, 'we': 1, 'three.': 1, 'TREE': 1, 'Three': 1, 'fish': 1, 'tree.': 1, 'Fish': 1, 'tree?': 1, 'How': 1, 'be?': 1, 'Red.': 1, 'am': 1, 'bed.': 1, 'NED': 1, 'TED': 1, 'ED': 1, 'they': 1, 'Pat.': 1, 'SAT': 1, 'hat.': 1, 'CAT': 1, 'cat.': 1, 'BAT': 1, 'bat.': 1, 'Don’t': 1, 'sit': 1, 'that.': 1, 'SAD': 1, 'DAD': 1, 'BAD': 1, 'HAD': 1, 'Very,': 1, 'very': 1, 'had': 1, 'bad': 1, 'day': 1, 'had!': 1, 'thing?': 1, 'SING': 1, 'thing': 1, 'sing!': 1, 'SONG': 1, 'LONG': 1, 'A': 1, 'long,': 1, 'long': 1, 'song.': 1, 'Good-by,': 1, 'Thing.': 1, 'sing': 1, 'too': 1, 'long.': 1, 'walk.': 1, 'TALK': 1, 'talk.': 1, 'HOP': 1, 'POP': 1, 'hop.': 1, 'top': 1, 'STOP': 1, 'must': 1, 'Mrs.': 1, 'upside': 1, 'Where': 1, 'Brown?': 1, 'WHERE': 1, 'BROWN?': 1, 'THERE': 1, 'BROWN!': 1, 'town.': 1, 'BACK': 1, 'BLACK': 1, 'back.': 1, 'back': 1, 'snack.': 1, 'snack': 1, 'JUMP': 1, 'BUMP': 1, 'jumped.': 1, 
'bumped.': 1, 'FAST': 1, 'PAST': 1, 'past': 1, 'fast.': 1, 'WENT': 1, 'TENT': 1, 'SENT': 1, 'into': 1, 'sent': 1, 'WET': 1, 'GET': 1, 'Two': 1, 'dogs': 1, 'get': 1, 'wet.': 1, 'HELP': 1, 'YELP': 1, 'yelp': 1, 'for': 1, 'help.': 1, 'hill.': 1, 'STILL': 1, 'hill': 1, 'still.': 1, 'FATHER': 1, 'MOTHER': 1, 'SISTER': 1, 'BROTHER': 1, 'one': 1, 'my': 1, 'other': 1, 'brother.': 1, 'brothers': 1, 'little': 1, 'bit.': 1, 'Little': 1, 'words': 1, 'If': 1, 'it.': 1, 'father': 1, 'big': 1, 'words,': 1, 'too.': 1, 'Like': 1, 'CONSTANTINOPLE': 1, 'TIMBUKTU': 1, 'does': 1, 'this': 1, 'say?': 1, 'seehemewe': 1, 'patpuppop': 1, 'hethreetreebee': 1, 'tophopstop': 1, 'Ask': 1, 'tomorrow': 1, 'but': 1, 'today.': 1})

Bag-of-Words Representation

… then, we put these counts into a Series.
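Because `Counter` is a subclass of `dict`, it can be passed straight to `pd.Series()`. The words become the index (in the order they were first seen) and the counts become the values. This is a sketch on the same hypothetical word list used for splitting and counting.

```python
from collections import Counter

import pandas as pd

# Hypothetical word list standing in for one split document
words = ['UP', 'PUP', 'Pup', 'is', 'up.', 'CUP', 'PUP', 'Pup', 'in', 'cup.']

# Index = distinct words, values = how many times each one occurs
counts = pd.Series(Counter(words))
print(counts)
```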

Bag-of-Words Representation

… then, we do this for every document.
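Putting the two steps together for a whole corpus gives one Series per document. This sketch assumes, as the notes do, that `docs` is a dictionary mapping file names to full texts. The two short documents and their file names are made up for illustration.

```python
from collections import Counter

import pandas as pd

# Hypothetical stand-in for the notes' docs dictionary (file name -> text)
docs = {
    "doc_a.txt": "I am Sam. Sam I am.",
    "doc_b.txt": "I do not like green eggs and ham.",
}

# One Series of word counts per document, in the same order as docs
series_list = [pd.Series(Counter(doc.split())) for doc in docs.values()]

for name, counts in zip(docs, series_list):
    print(name)
    print(counts, end="\n\n")
```

Note that `'Sam.'` and `'Sam'` become separate entries, because `split()` leaves punctuation attached to the word.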

[I 71
am 3
Sam 3
That 2
Sam-I-am 4
..
good 2
see! 1
So 1
Thank 2
you! 1
Length: 116, dtype: int64,
The 4
sun 2
did 6
not 27
shine. 1
..
Now, 1
Well... 1
YOU 1
asked 1
YOU? 1
Length: 503, dtype: int64,
Fox 6
Socks 4
Box 1
Knox 8
in 19
..
our 1
done, 1
Thank 1
lot 1
fun, 1
Length: 328, dtype: int64,
Every 3
Who 9
Down 1
in 15
Whoville 4
..
light, 1
brought 1
he, 1
HIMSELF! 1
carved 1
Length: 623, dtype: int64,
UP 1
PUP 3
Pup 4
is 10
up. 2
..
tophopstop 1
Ask 1
tomorrow 1
but 1
today. 1
Length: 241, dtype: int64,
On 5
the 88
fifteenth 1
of 33
May, 1
..
summer. 1
rain 1
it's 1
fall-ish, 1
small-ish!" 1
Length: 918, dtype: int64,
Congratulations! 1
Today 2
is 7
your 19
day. 1
..
day! 1
Your 1
mountain 1
So...get 1
way! 1
Length: 449, dtype: int64,
One 1
fish, 7
two 2
red 1
blue 2
..
Tomorrow 1
another 1
one. 1
Every 1
there. 1
Length: 501, dtype: int64]

- Counter(doc.split()) splits each document into words and counts how often each word appears
- for doc in docs.values() iterates over every document
- pd.Series() converts the Counter() object into a pandas Series

Create a DataFrame

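… finally, we stack the Series into a DataFrame with one row per document and one column per word. This is called bag-of-words data. A word that does not appear in a document shows up as NaN in that document's row.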
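The following is a sketch of the stacking step on a hypothetical two-document `docs` dictionary. When a list of Series is passed to `pd.DataFrame()`, each Series becomes a row and the columns are the union of all words. That union is where the NaN values come from, and it is also why the counts below are shown as floats (71.0). The `fillna(0)` step is an addition that the output below does not show. It is one common way to read "missing" as "appears zero times".

```python
from collections import Counter

import pandas as pd

# Hypothetical stand-in for the notes' docs dictionary (file name -> text)
docs = {
    "doc_a.txt": "I am Sam. Sam I am.",
    "doc_b.txt": "I do not like green eggs and ham.",
}
series_list = [pd.Series(Counter(doc.split())) for doc in docs.values()]

# Each Series becomes one row, labelled by its file name.
# A word absent from a document gets NaN, which forces a float dtype.
raw = pd.DataFrame(series_list, index=list(docs.keys()))
print(raw)

# Extra step (not in the notes): treat NaN as a count of 0, back to integers
bow = raw.fillna(0).astype(int)
print(bow)
```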

I am Sam ... gone. Tomorrow one.
green_eggs_and_ham.txt 71.0 3.0 3.0 ... NaN NaN NaN
cat_in_the_hat.txt 48.0 NaN NaN ... NaN NaN NaN
fox_in_socks.txt 9.0 NaN NaN ... NaN NaN NaN
how_the_grinch_stole_christmas.txt 6.0 NaN NaN ... NaN NaN NaN
hop_on_pop.txt 2.0 1.0 NaN ... NaN NaN NaN
horton_hears_a_who.txt 18.0 1.0 NaN ... NaN NaN NaN
oh_the_places_youll_go.txt 2.0 NaN NaN ... NaN NaN NaN
one_fish_two_fish.txt 48.0 3.0 NaN ... 1.0 1.0 1.0

[8 rows x 2562 columns]

Bag-of-Words in Scikit-Learn

Alternatively, we can use CountVectorizer() in scikit-learn to produce a bag-of-words matrix.

Fit

CountVectorizer()

Entire Vocabulary

The set of words across a corpus is called the vocabulary. A fitted CountVectorizer() stores it in its vocabulary_ attribute, a dictionary that maps each word to its column index in the bag-of-words matrix:
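The sketch below, on a made-up corpus, helps explain the vocabulary that follows. Entries such as `'sam'`, `'am'`, and `'don'` appear, but there is no `'i'`. The default tokenizer lowercases the text and splits on non-word characters such as hyphens and apostrophes. It then keeps only tokens of at least two characters, so "Sam-I-am" becomes `sam` and `am`, and "don't" becomes `don`. The column indices follow alphabetical order of the words.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical corpus chosen to show the default tokenization rules
corpus = [
    "I do not like them, Sam-I-am.",
    "I don't like green eggs and ham!",
]

# fit() returns the vectorizer, so it can be chained
vec = CountVectorizer().fit(corpus)

# word -> column index; indices are assigned in alphabetical order
print(vec.vocabulary_)
print(len(vec.vocabulary_))  # 11
```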

{'am': 23, 'sam': 935, 'that': 1138, 'do': 287, 'not': 767, 'like': 644, 'you': 1336, 'green': 471, 'eggs': 326, 'and': 26, 'ham': 495, 'them': 1141, 'would': 1316, 'here': 526, 'or': 786, 'there': 1143, 'anywhere': 32, 'in': 576, 'house': 558, 'with': 1303, 'mouse': 722, 'eat': 323, 'box': 132, 'fox': 419, 'could': 242, 'car': 179, 'they': 1145, 'are': 35, 'may': 688, 'will': 1292, 'see': 953, 'tree': 1204, 'let': 635, 'me': 691, 'be': 62, 'mot': 718, 'train': 1202, 'on': 778, 'say': 944, 'the': 1139, 'dark': 265, 'rain': 884, 'goat': 453, 'boat': 118, 'so': 1035, 'try': 1213, 'if': 575, 'good': 459, 'thank': 1136, 'sun': 1107, 'did': 279, 'shine': 972, 'it': 586, 'was': 1255, 'too': 1188, 'wet': 1268, 'to': 1178, 'play': 836, 'we': 1261, 'sat': 940, 'all': 16, 'cold': 231, 'day': 270, 'sally': 934, 'two': 1220, 'said': 932, 'how': 560, 'wish': 1302, 'had': 488, 'something': 1042, 'go': 452, 'out': 789, 'ball': 50, 'nothing': 768, 'at': 43, 'sit': 1001, 'one': 780, 'little': 650, 'bit': 102, 'bump': 157, 'then': 1142, 'went': 1265, 'made': 673, 'us': 1231, 'jump': 594, 'looked': 660, 'saw': 943, 'him': 536, 'step': 1077, 'mat': 684, 'cat': 185, 'hat': 507, 'he': 513, 'why': 1285, 'know': 614, 'is': 583, 'sunny': 1108, 'but': 165, 'can': 176, 'have': 512, 'lots': 663, 'of': 773, 'fun': 434, 'funny': 435, 'some': 1039, 'games': 438, 'new': 747, 'tricks': 1207, 'lot': 662, 'show': 986, 'your': 1338, 'mother': 719, 'mind': 704, 'what': 1269, 'our': 788, 'for': 413, 'fish': 388, 'no': 754, 'make': 676, 'away': 44, 'tell': 1130, 'want': 1253, 'should': 980, 'about': 4, 'when': 1271, 'now': 769, 'fear': 368, 'my': 735, 'bad': 47, 'game': 437, 'call': 172, 'up': 1226, 'put': 869, 'down': 299, 'this': 1151, 'fall': 358, 'hold': 543, 'high': 532, 'as': 39, 'stand': 1064, 'book': 122, 'hand': 496, 'cup': 260, 'look': 659, 'cake': 171, 'top': 1191, 'books': 123, 'litte': 649, 'toy': 1200, 'ship': 973, 'milk': 702, 'dish': 284, 'hop': 550, 'oh': 775, 'these': 1144, 'rake': 
885, 'man': 680, 'tail': 1117, 'red': 897, 'fan': 362, 'fell': 372, 'his': 538, 'head': 514, 'came': 175, 'from': 429, 'things': 1149, 'into': 582, 'pot': 853, 'lit': 648, 'sank': 937, 'deep': 275, 'shook': 979, 'bent': 85, 'get': 444, 'another': 27, 'ran': 887, 'fast': 364, 'back': 46, 'big': 93, 'wood': 1308, 'shut': 987, 'hook': 548, 'trick': 1206, 'take': 1118, 'got': 462, 'tip': 1175, 'bow': 131, 'pick': 823, 'thing': 1148, 'bite': 103, 'shake': 964, 'hands': 497, 'their': 1140, 'those': 1153, 'gave': 442, 'pat': 807, 'tame': 1125, 'come': 233, 'give': 448, 'fly': 405, 'kites': 611, 'hit': 540, 'run': 925, 'hall': 494, 'wall': 1251, 'thump': 1161, 'string': 1096, 'kite': 610, 'gown': 463, 'her': 525, 'dots': 297, 'pink': 829, 'white': 1277, 'bed': 67, 'bumps': 159, 'jumps': 596, 'kicks': 605, 'hops': 551, 'thumps': 1162, 'kinds': 609, 'way': 1260, 'she': 969, 'home': 545, 'hear': 517, 'find': 380, 'near': 738, 'think': 1150, 'rid': 903, 'after': 7, 'net': 745, 'bet': 87, 'yet': 1327, 'plop': 841, 'last': 625, 'thoe': 1152, 'stop': 1086, 'pack': 793, 'dear': 273, 'shame': 967, 'sad': 929, 'kind': 607, 'has': 505, 'gone': 457, 'yes': 1326, 'mess': 697, 'tall': 1124, 'ca': 168, 'who': 1279, 'always': 22, 'playthings': 838, 'were': 1266, 'picked': 824, 'strings': 1097, 'any': 29, 'well': 1264, 'asked': 41, 'socks': 1037, 'knox': 616, 'chicks': 203, 'bricks': 143, 'blocks': 112, 'clocks': 224, 'sir': 999, 'mr': 727, 'first': 387, 'll': 653, 'quick': 875, 'brick': 142, 'stack': 1063, 'block': 111, 'chick': 202, 'clock': 223, 'ticks': 1165, 'tocks': 1180, 'tick': 1164, 'tock': 1179, 'six': 1003, 'sick': 988, 'please': 840, 'don': 293, 'tongue': 1187, 'isn': 585, 'slick': 1011, 'mixed': 709, 'sorry': 1048, 'an': 25, 'easy': 322, 'whose': 1283, 'sue': 1105, 'sews': 963, 'sees': 959, 'sew': 962, 'comes': 234, 'crow': 255, 'slow': 1014, 'joe': 590, 'clothes': 226, 'rose': 920, 'hose': 554, 'nose': 766, 'goes': 454, 'grows': 482, 'hate': 508, 'makes': 678, 'quite': 879, 
'lame': 622, 'blue': 117, 'goo': 458, 'gooey': 460, 'gluey': 451, 'chewy': 201, 'chewing': 200, 'goose': 461, 'doing': 292, 'choose': 209, 'chew': 199, 'won': 1305, 'very': 1237, 'bim': 97, 'ben': 82, 'brings': 146, 'broom': 148, 'bends': 83, 'breaks': 140, 'band': 51, 'bands': 52, 'pig': 825, 'lead': 630, 'brooms': 149, 'bangs': 54, 'booms': 125, 'boom': 124, 'poor': 849, 'mouth': 724, 'much': 730, 'bring': 145, 'luke': 669, 'luck': 668, 'likes': 647, 'lakes': 621, 'duck': 309, 'licks': 637, 'takes': 1119, 'blab': 105, 'such': 1103, 'blibber': 110, 'blubber': 116, 'rubber': 923, 'dumb': 311, 'through': 1160, 'three': 1158, 'cheese': 198, 'trees': 1205, 'free': 420, 'fleas': 395, 'flew': 396, 'while': 1276, 'freezy': 422, 'breeze': 141, 'blew': 109, 'freeze': 421, 'sneeze': 1033, 'enough': 340, 'silly': 991, 'stuff': 1100, 'talk': 1121, 'tweetle': 1218, 'beetles': 72, 'fight': 377, 'called': 173, 'beetle': 71, 'battle': 59, 'puddle': 866, 'paddles': 798, 'paddle': 796, 'bottle': 127, 'muddle': 731, 'battles': 60, 'poodle': 846, 'eating': 324, 'noodles': 761, 'noodle': 760, 'wait': 1244, 'minute': 706, 'where': 1272, 'bottled': 128, 'paddled': 797, 'muddled': 732, 'duddled': 310, 'fuddled': 432, 'wuddled': 1319, 'done': 294, 'every': 346, 'whoville': 1284, 'liked': 645, 'christmas': 210, 'grinch': 473, 'lived': 652, 'just': 598, 'north': 765, 'hated': 509, 'whole': 1280, 'season': 952, 'ask': 40, 'knows': 615, 'reason': 896, 'wasn': 1256, 'screwed': 950, 'right': 905, 'perhaps': 817, 'shoes': 978, 'tight': 1168, 'most': 716, 'likely': 646, 'been': 69, 'heart': 519, 'sizes': 1005, 'small': 1019, 'whatever': 1270, 'stood': 1085, 'eve': 343, 'hating': 510, 'whos': 1282, 'staring': 1068, 'cave': 189, 'sour': 1053, 'grinchy': 475, 'frown': 430, 'warm': 1254, 'lighted': 643, 'windows': 1295, 'below': 81, 'town': 1198, 'knew': 612, 'beneath': 84, 'busy': 164, 'hanging': 499, 'mistletoe': 707, 'wreath': 1318, 're': 892, 'stockings': 1082, 'snarled': 1027, 'sneer': 1031, 
'tomorrow': 1186, 'practically': 856, 'growled': 481, 'fingers': 384, 'nervously': 744, 'drumming': 307, 'must': 734, 'coming': 235, 'girls': 447, 'boys': 134, 'wake': 1246, 'bright': 144, 'early': 318, 'rush': 926, 'toys': 1201, 'noise': 755, 'young': 1337, 'old': 777, 'feast': 369, 'pudding': 865, 'rare': 889, 'roast': 912, 'beast': 64, 'which': 1275, 'couldn': 243, 'least': 632, 'close': 225, 'together': 1183, 'bells': 80, 'ringing': 907, 'start': 1069, 'singing': 997, 'sing': 996, 'more': 714, 'thought': 1155, 'fifty': 376, 'years': 1322, 've': 1236, 'idea': 574, 'awful': 45, 'wonderful': 1306, 'laughed': 627, 'throat': 1159, 'santy': 939, 'claus': 216, 'coat': 230, 'chuckled': 211, 'clucked': 229, 'great': 467, 'saint': 933, 'nick': 750, 'need': 742, 'reindeer': 898, 'around': 38, 'since': 994, 'scarce': 945, 'none': 757, 'found': 417, 'simply': 993, 'instead': 581, 'dog': 290, 'max': 687, 'took': 1189, 'thread': 1157, 'tied': 1167, 'horn': 552, 'loaded': 655, 'bags': 48, 'empty': 335, 'sacks': 928, 'ramshackle': 886, 'sleigh': 1010, 'hitched': 541, 'giddap': 446, 'started': 1070, 'toward': 1195, 'homes': 546, 'lay': 629, 'asnooze': 42, 'quiet': 877, 'snow': 1034, 'filled': 378, 'air': 12, 'dreaming': 301, 'sweet': 1112, 'dreams': 302, 'without': 1304, 'care': 180, 'square': 1062, 'number': 771, 'hissed': 539, 'climbed': 222, 'roof': 916, 'fist': 389, 'slid': 1012, 'chimney': 206, 'rather': 890, 'pinch': 828, 'santa': 938, 'stuck': 1099, 'only': 781, 'once': 779, 'moment': 710, 'fireplace': 386, 'flue': 403, 'hung': 570, 'row': 922, 'grinned': 477, 'slithered': 1013, 'slunk': 1017, 'smile': 1023, 'unpleasant': 1225, 'room': 917, 'present': 857, 'pop': 850, 'guns': 485, 'bicycles': 92, 'roller': 915, 'skates': 1006, 'drums': 308, 'checkerboards': 197, 'tricycles': 1208, 'popcorn': 851, 'plums': 843, 'stuffed': 1101, 'nimbly': 752, 'by': 167, 'icebox': 573, 'cleaned': 218, 'flash': 394, 'even': 344, 'hash': 506, 'food': 408, 'glee': 450, 'grabbed': 466, 'shove': 
985, 'heard': 518, 'sound': 1051, 'coo': 239, 'dove': 298, 'turned': 1215, 'cindy': 213, 'lou': 664, 'than': 1135, 'caught': 187, 'tiny': 1174, 'daughter': 268, 'water': 1258, 'stared': 1067, 'taking': 1120, 'smart': 1021, 'lie': 638, 'tot': 1194, 'fake': 357, 'lied': 639, 'light': 642, 'side': 989, 'workshop': 1312, 'fix': 391, 'fib': 374, 'fooled': 410, 'child': 204, 'patted': 810, 'drink': 303, 'sent': 960, 'log': 656, 'fire': 385, 'himself': 537, 'liar': 636, 'walls': 1252, 'left': 634, 'hooks': 549, 'wire': 1301, 'speck': 1056, 'crumb': 256, 'same': 936, 'other': 787, 'houses': 559, 'leaving': 633, 'crumbs': 257, 'mouses': 723, 'quarter': 873, 'past': 806, 'dawn': 269, 'still': 1081, 'packed': 795, 'sled': 1008, 'presents': 858, 'ribbons': 902, 'wrappings': 1317, 'tags': 1116, 'tinsel': 1173, 'trimmings': 1209, 'trappings': 1203, 'thousand': 1156, 'feet': 371, 'mt': 729, 'crumpit': 258, 'rode': 914, 'load': 654, 'tiptop': 1176, 'dump': 312, 'pooh': 847, 'grinchishly': 474, 'humming': 565, 'finding': 381, 'waking': 1247, 'mouths': 725, 'hang': 498, 'open': 784, 'cry': 259, 'boo': 121, 'hoo': 547, 'paused': 811, 'ear': 317, 'rising': 908, 'over': 790, 'low': 667, 'grow': 480, 'sounded': 1052, 'merry': 696, 'popped': 852, 'eyes': 352, 'shocking': 976, 'surprise': 1111, 'hadn': 489, 'stopped': 1087, 'somehow': 1040, 'ice': 572, 'puzzling': 872, 'packages': 794, 'boxes': 133, 'puzzled': 870, 'hours': 557, 'till': 1169, 'puzzler': 871, 'sore': 1047, 'before': 74, 'maybe': 689, 'doesn': 289, 'store': 1088, 'means': 693, 'happened': 501, 'grew': 472, 'didn': 280, 'feel': 370, 'whizzed': 1278, 'morning': 715, 'brought': 152, 'carved': 183, 'pup': 868, 'off': 774, 'night': 751, 'jim': 588, 'bee': 68, 'ned': 741, 'ted': 1128, 'ed': 325, 'bat': 57, 'dad': 263, 'song': 1045, 'long': 658, 'walk': 1248, 'brown': 153, 'mrs': 728, 'upside': 1230, 'black': 106, 'snack': 1025, 'jumped': 595, 'bumped': 158, 'tent': 1132, 'dogs': 291, 'help': 523, 'yelp': 1325, 'hill': 534, 
'father': 366, 'sister': 1000, 'brother': 150, 'brothers': 151, 'read': 893, 'words': 1309, 'constantinople': 238, 'timbuktu': 1170, 'does': 288, 'seehemewe': 954, 'patpuppop': 809, 'hethreetreebee': 527, 'tophopstop': 1192, 'today': 1181, 'fifteenth': 375, 'jungle': 597, 'nool': 763, 'heat': 520, 'cool': 241, 'pool': 848, 'splashing': 1058, 'enjoying': 339, 'joys': 592, 'horton': 553, 'elephant': 331, 'towards': 1196, 'again': 9, 'faint': 355, 'person': 818, 'calling': 174, 'dust': 314, 'blowing': 115, 'though': 1154, 'murmured': 733, 'never': 746, 'able': 3, 'yell': 1323, 'someone': 1041, 'sort': 1049, 'creature': 251, 'size': 1004, 'seen': 958, 'shaking': 965, 'blow': 114, 'steer': 1076, 'save': 941, 'because': 66, 'matter': 685, 'gently': 443, 'using': 1233, 'greatest': 469, 'stretched': 1095, 'trunk': 1212, 'lifted': 641, 'carried': 181, 'placed': 832, 'safe': 931, 'soft': 1038, 'clover': 227, 'humpf': 567, 'humpfed': 568, 'voice': 1242, 'twas': 1217, 'kangaroo': 599, 'pouch': 855, 'pin': 827, 'believe': 77, 'sincerely': 995, 'ears': 319, 'keen': 601, 'clearly': 221, 'four': 418, 'family': 360, 'children': 205, 'starting': 1071, 'favour': 367, 'disturb': 286, 'fool': 409, 'biggest': 95, 'blame': 107, 'kangaroos': 600, 'plunged': 844, 'terrible': 1133, 'frowned': 431, 'persons': 819, 'drowned': 306, 'protect': 861, 'bigger': 94, 'plucked': 842, 'hustled': 571, 'tops': 1193, 'news': 748, 'quickly': 876, 'spread': 1061, 'talks': 1123, 'flower': 402, 'walked': 1249, 'worrying': 1315, 'almost': 18, 'hour': 556, 'alarm': 13, 'harm': 504, 'walking': 1250, 'talking': 1122, 'barely': 56, 'speak': 1055, 'friend': 425, 'fine': 382, 'helped': 524, 'folks': 407, 'end': 336, 'saved': 942, 'ceilings': 190, 'floors': 401, 'churches': 212, 'grocery': 479, 'stores': 1089, 'mean': 692, 'gasped': 441, 'buildings': 156, 'piped': 830, 'certainly': 191, 'mayor': 690, 'friendly': 426, 'clean': 217, 'seem': 956, 'terribly': 1134, 'aren': 36, 'wonderfully': 1307, 'ville': 1239, 
'thankful': 1137, 'greatful': 470, 'worry': 1314, 'spoke': 1059, 'monkeys': 711, 'neck': 739, 'wickersham': 1286, 'shouting': 984, 'rot': 921, 'elephants': 332, 'going': 455, 'nonsense': 758, 'snatched': 1028, 'bottomed': 129, 'eagle': 316, 'named': 737, 'valad': 1234, 'vlad': 1241, 'koff': 617, 'mighty': 699, 'strong': 1098, 'swift': 1113, 'wing': 1296, 'kindly': 608, 'beak': 63, 'late': 626, 'afternoon': 8, 'far': 363, 'bird': 99, 'flapped': 392, 'wings': 1297, 'flight': 398, 'chased': 195, 'groans': 478, 'stones': 1084, 'tattered': 1126, 'toenails': 1182, 'battered': 58, 'bones': 120, 'begged': 75, 'live': 651, 'folk': 406, 'beyond': 90, 'kept': 602, 'flapping': 393, 'shoulder': 981, 'quit': 878, 'yapping': 1321, 'hide': 531, '56': 1, 'next': 749, 'sure': 1109, 'place': 831, 'hid': 529, 'drop': 304, 'somewhere': 1044, 'inside': 579, 'patch': 808, 'clovers': 228, 'hundred': 569, 'miles': 701, 'wide': 1288, 'sneered': 1032, 'fail': 354, 'flip': 399, 'cried': 253, 'bust': 163, 'shall': 966, 'friends': 427, 'searched': 951, 'sought': 1050, 'noon': 764, 'dead': 272, 'alive': 15, 'piled': 826, 'nine': 753, 'five': 390, 'millionth': 703, 'really': 895, 'trouble': 1210, 'share': 968, 'birdie': 100, 'dropped': 305, 'landed': 623, 'hard': 503, 'tea': 1127, 'pots': 854, 'broken': 147, 'rocking': 913, 'chairs': 192, 'smashed': 1022, 'bicycle': 91, 'tires': 1177, 'crashed': 250, 'pleaded': 839, 'stick': 1079, 'making': 679, 'repairs': 900, 'course': 246, 'answered': 28, 'thin': 1147, 'thick': 1146, 'days': 271, 'wild': 1291, 'insisted': 580, 'chatting': 196, 'existed': 350, 'carryings': 182, 'peaceable': 812, 'bellowing': 79, 'bungle': 160, 'state': 1072, 'snapped': 1026, 'nonsensical': 759, 'dozens': 300, 'uncles': 1223, 'wickershams': 1287, 'cousins': 247, 'laws': 628, 'engaged': 338, 'roped': 919, 'caged': 170, 'hah': 490, 'boil': 119, 'hot': 555, 'steaming': 1075, 'kettle': 603, 'beezle': 73, 'nut': 772, 'oil': 776, 'full': 433, 'prove': 862, 'meeting': 695, 'everyone': 
347, 'holler': 544, 'shout': 982, 'scream': 949, 'stew': 1078, 'scared': 947, 'people': 814, 'loudly': 666, 'smiled': 1024, 'clear': 219, 'bell': 78, 'surely': 1110, 'wind': 1294, 'distant': 285, 'voices': 1243, 'either': 329, 'neither': 743, 'grab': 465, 'shouted': 983, 'cage': 169, 'dope': 296, 'lasso': 624, 'stomach': 1083, 'ten': 1131, 'rope': 918, 'tie': 1166, 'knots': 613, 'lose': 661, 'dunk': 313, 'juice': 593, 'fought': 415, 'vigor': 1238, 'vim': 1240, 'gang': 439, 'many': 682, 'beat': 65, 'mauled': 686, 'haul': 511, 'managed': 681, 'die': 281, 'yourselves': 1340, 'tom': 1185, 'smack': 1018, 'whooped': 1281, 'racked': 882, 'rattled': 891, 'kettles': 604, 'brass': 137, 'pans': 802, 'garbage': 440, 'pail': 800, 'cranberry': 249, 'cans': 178, 'bazooka': 61, 'blasted': 108, 'toots': 1190, 'clarinets': 214, 'oom': 783, 'pahs': 799, 'flutes': 404, 'gusts': 486, 'loud': 665, 'racket': 883, 'rang': 888, 'sky': 1007, 'howling': 562, 'mad': 672, 'hullabaloo': 564, 'hey': 528, 'hows': 563, 'mine': 705, 'best': 86, 'working': 1311, 'anyone': 30, 'shirking': 975, 'rushed': 927, 'east': 321, 'west': 1267, 'seemed': 957, 'yipping': 1331, 'beeping': 70, 'bipping': 98, 'ruckus': 924, 'roar': 911, 'raced': 881, 'each': 315, 'building': 155, 'floor': 400, 'felt': 373, 'getting': 445, 'nowhere': 770, 'despair': 277, 'suddenly': 1104, 'burst': 161, 'door': 295, 'discovered': 283, 'shirker': 974, 'hidden': 530, 'fairfax': 356, 'apartments': 34, 'apartment': 33, '12': 0, 'jo': 589, 'standing': 1065, 'bouncing': 130, 'yo': 1332, 'yipp': 1330, 'chirp': 208, 'twerp': 1219, 'lad': 619, 'eiffelberg': 327, 'tower': 1197, 'towns': 1199, 'darkest': 267, 'time': 1171, 'blood': 113, 'aid': 11, 'country': 244, 'noises': 756, 'greater': 468, 'amounts': 24, 'counts': 245, 'thus': 1163, 'cleared': 220, 'yopp': 1335, 'extra': 351, 'finally': 379, 'proved': 863, 'world': 1313, 'smallest': 1020, 'true': 1211, 'planning': 835, 'summer': 1106, 'ish': 584, 'congratulations': 237, 'places': 833, 
'brains': 135, 'yourself': 1339, 'direction': 282, 'own': 791, 'guy': 487, 'decide': 274, 'streets': 1094, 'em': 334, 'street': 1093, 'case': 184, 'straight': 1091, 'opener': 785, 'happen': 500, 'frequently': 423, 'brainy': 136, 'footsy': 412, 'along': 20, 'happening': 502, 'seeing': 955, 'sights': 990, 'join': 591, 'fliers': 397, 'soar': 1036, 'heights': 521, 'lag': 620, 'behind': 76, 'speed': 1057, 'pass': 805, 'soon': 1046, 'wherever': 1273, 'rest': 901, 'except': 349, 'sometimes': 1043, 'sadly': 930, 'bang': 53, 'ups': 1229, 'prickle': 859, 'ly': 671, 'perch': 816, 'lurch': 670, 'chances': 194, 'slump': 1015, 'un': 1221, 'slumping': 1016, 'easily': 320, 'marked': 683, 'mostly': 717, 'darked': 266, 'sprain': 1060, 'both': 126, 'elbow': 330, 'chin': 207, 'dare': 264, 'stay': 1073, 'win': 1293, 'turn': 1214, 'quarters': 874, 'sneak': 1029, 'simple': 992, 'afraid': 6, 'maker': 677, 'upper': 1228, 'confused': 236, 'race': 880, 'wiggled': 1290, 'roads': 910, 'break': 139, 'necking': 740, 'pace': 792, 'grind': 476, 'cross': 254, 'weirdish': 1263, 'space': 1054, 'headed': 515, 'useless': 1232, 'waiting': 1245, 'bus': 162, 'plane': 834, 'mail': 675, 'phone': 822, 'ring': 906, 'hair': 491, 'friday': 424, 'uncle': 1222, 'jake': 587, 'better': 88, 'pearls': 813, 'pair': 801, 'pants': 803, 'wig': 1289, 'curls': 261, 'chance': 193, 'escape': 341, 'staying': 1074, 'playing': 837, 'banner': 55, 'ride': 904, 'ready': 894, 'anything': 31, 'under': 1224, 'points': 845, 'scored': 948, 'magical': 674, 'winning': 1300, 'est': 342, 'winner': 1299, 'fame': 359, 'famous': 361, 'watching': 1257, 'tv': 1216, 'times': 1172, 'lonely': 657, 'cause': 188, 'against': 10, 'alone': 19, 'whether': 1274, 'meet': 694, 'scare': 946, 'road': 909, 'between': 89, 'hither': 542, 'yon': 1333, 'weather': 1262, 'foul': 416, 'enemies': 337, 'prowl': 864, 'hakken': 493, 'kraks': 618, 'howl': 561, 'onward': 782, 'frightening': 428, 'creek': 252, 'arms': 37, 'sneakers': 1030, 'leak': 631, 'hike': 533, 'face': 
353, 'problems': 860, 'already': 21, 'strange': 1092, 'birds': 101, 'tact': 1115, 'remember': 899, 'life': 640, 'balancing': 49, 'act': 5, 'forget': 414, 'dexterous': 278, 'deft': 276, 'mix': 708, 'foot': 411, 'succeed': 1102, 'indeed': 577, '98': 2, 'percent': 815, 'guaranteed': 483, 'kid': 606, 'move': 726, 'mountains': 721, 'name': 736, 'buxbaum': 166, 'bixby': 104, 'bray': 138, 'mordecai': 713, 'ali': 14, 'van': 1235, 'allen': 17, 'shea': 970, 'mountain': 720, 'star': 1066, 'glad': 449, 'fat': 365, 'yellow': 1324, 'everywhere': 348, 'seven': 961, 'eight': 328, 'eleven': 333, 'ever': 345, 'wump': 1320, 'hump': 566, 'gump': 484, 'pull': 867, 'sticks': 1080, 'bike': 96, 'mike': 700, 'sits': 1002, 'work': 1310, 'hills': 535, 'hello': 522, 'cow': 248, 'cannot': 177, 'teeth': 1129, 'gold': 456, 'shoe': 977, 'story': 1090, 'told': 1184, 'nook': 762, 'cook': 240, 'moon': 712, 'sheep': 971, 'sleep': 1009, 'zans': 1341, 'gox': 464, 'ying': 1328, 'sings': 998, 'yink': 1329, 'wink': 1298, 'ink': 578, 'yop': 1334, 'finger': 383, 'brush': 154, 'comb': 232, 'pet': 820, 'met': 698, 'cats': 186, 'cut': 262, 'pets': 821, 'zeds': 1342, 'upon': 1227, 'heads': 516, 'haircut': 492, 'wave': 1259, 'swish': 1114, 'gack': 436, 'park': 804, 'clark': 215, 'zeep': 1343}

Specific Words

Text Normalizing

What’s wrong with the way we counted words originally?

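Counter({'UP': 1, 'PUP': 3, 'Pup': 4, 'is': 10, 'up.': 2, ...})

The same word is counted separately depending on its capitalization ('PUP' vs. 'Pup') and any punctuation attached to it ('up.'). It’s usually good to normalize for punctuation and capitalization.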
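One way to normalize by hand, sketched here with only the standard library, is to lowercase each word and strip leading and trailing punctuation before counting. The sample text is made up for illustration, and str.strip(string.punctuation) is just one simple choice of normalization:

```python
import string
from collections import Counter

# Made-up sample text with mixed capitalization and punctuation
text = "UP PUP Pup is up. Pup is up!"

# Raw counts: 'UP', 'up.', and 'up!' are treated as three different words
raw = Counter(text.split())

# Normalized counts: lowercase, then strip leading/trailing punctuation
normalized = Counter(
    word.lower().strip(string.punctuation) for word in text.split()
)

print(raw)
print(normalized)
```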

Normalization options are specified when you initialize the CountVectorizer(). By default, scikit-learn strips punctuation and converts all characters to lowercase.

N-grams

The Shortcomings of Bag-of-Words

Bag-of-words is easy to understand and easy to implement. What are its disadvantages?

Consider the following documents:

“The dog bit her owner.”

“Her dog bit the owner.”

Both documents have the same exact bag-of-words representation, but they mean something quite different!

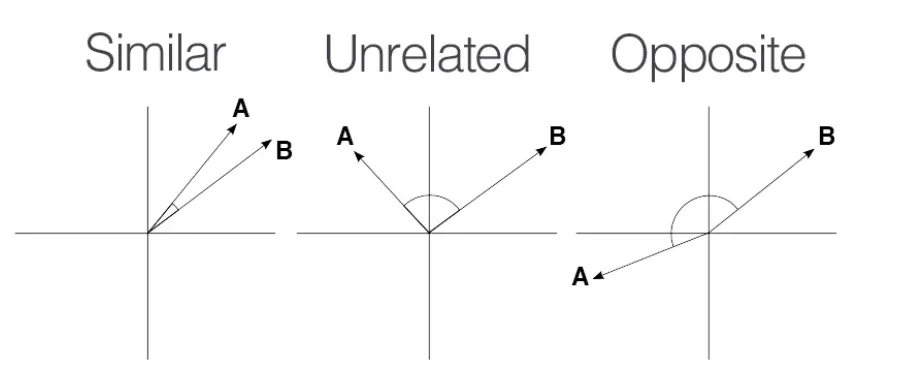

N-grams

An n-gram is a sequence of \(n\) consecutive words.
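To make the definition concrete, here is a minimal sketch in plain Python. The helper name ngrams is hypothetical (it is not a library function); it slides a window of length n across a list of words:

```python
def ngrams(words, n):
    """Return every run of n consecutive words as a tuple (hypothetical helper)."""
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


words = "the dog bit her owner".split()

# 2-grams (bigrams): each adjacent pair of words
print(ngrams(words, 2))
```

A sequence of k words has k - n + 1 n-grams, so the five words above give four bigrams and three trigrams.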

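N-grams preserve some of the word order, so they capture more of the meaning. For example, the 2-gram “the dog” appears only in the first document above, and “her dog” appears only in the second.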