is_100m = df_bolt["Event"] == "2008 Olympics 100m"
df_100m = df_bolt[is_100m]
one_mean = df_100m["Time"].mean()
one_std = df_100m["Time"].std()
df_bolt["Standardized_Time"] = 0.0
df_bolt.loc[is_100m, "Standardized_Time"] = (df_bolt.loc[is_100m, "Time"] - one_mean) / one_std

Distances Between Observations

More words about ChatGPT…

What is my job?

Teaching you stuff

(Thoughtfully) choosing what to teach and how to teach it.

Assessing what you’ve learned

What do you understand about the tools I’ve taught you?

This is not the same as assessing whether you figured out a way to accomplish a given task.

Using the tools I teach

A nice, clean, efficient approach

A loop is often not necessary

When to make a function?

import pandas as pd

def calculate_simpson_index(values, position):
    # Convert values to a Pandas Series, ensuring they are strings
    values_series = pd.Series(values).astype(str)
    # Extract the specified character based on the position
    extracted_character = values_series.str[position]
    # Calculate the relative frequency of each character
    character_counts = extracted_character.value_counts(normalize=True)
    # Compute Simpson's index: 1 minus the sum of squared proportions
    simpson_index = 1 - (character_counts ** 2).sum()
    return simpson_index

The story so far…

Summarizing

One categorical variable: marginal distribution

Two categorical variables: joint and conditional distributions

One quantitative variable: mean, median, variance, standard deviation.

One quantitative, one categorical: mean, median, and std dev across groups (groupby(), split-apply-combine)

Two quantitative variables: z-scores, correlation

Visualizing

One categorical variable: bar plot or column plot

Two categorical variables: stacked bar plot, side-by-side bar plot, or stacked percentage bar plot

One quantitative variable: histogram, density plot, or boxplot

One quantitative, one categorical: overlapping densities, side-by-side boxplots, or facetting

Two quantitative variables: scatterplot

Today’s data: House prices

Ames house prices

|   | Order | PID | MS SubClass | ... | Sale Type | Sale Condition | SalePrice |
|---|---|---|---|---|---|---|---|
| 0 | 1 | 526301100 | 20 | ... | WD | Normal | 215000 |
| 1 | 2 | 526350040 | 20 | ... | WD | Normal | 105000 |
| 2 | 3 | 526351010 | 20 | ... | WD | Normal | 172000 |
| 3 | 4 | 526353030 | 20 | ... | WD | Normal | 244000 |
| 4 | 5 | 527105010 | 60 | ... | WD | Normal | 189900 |

[5 rows x 82 columns]

read_table, not read_csv

This is a TSV file (tab-separated values), so we need a different function to read in our data: pd.read_table() splits on tabs by default. With pd.read_csv(), the sep argument lets you specify the delimiter the file uses (sep="\t"), or you can set sep=None (together with engine="python") to let pandas detect the delimiter automatically.
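As a small sketch of these three options, here is a made-up two-row TSV built from the first rows of the table above. It is read from an in-memory string (`io.StringIO`) rather than the real file, whose path is not shown here; the variable names are illustrative.

```python
import io

import pandas as pd

# A tiny TSV snippet standing in for the Ames file (values copied from the first two rows above)
tsv_text = "Order\tPID\tSalePrice\n1\t526301100\t215000\n2\t526350040\t105000\n"

# read_table() uses a tab as its default delimiter
df_table = pd.read_table(io.StringIO(tsv_text))

# read_csv() defaults to commas, so the tab must be given explicitly
df_csv = pd.read_csv(io.StringIO(tsv_text), sep="\t")

# sep=None asks pandas to sniff the delimiter; this requires the python engine
df_sniffed = pd.read_csv(io.StringIO(tsv_text), sep=None, engine="python")

print(df_table)
```

All three calls should produce the same three-column DataFrame; read_table simply saves you from typing the delimiter.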

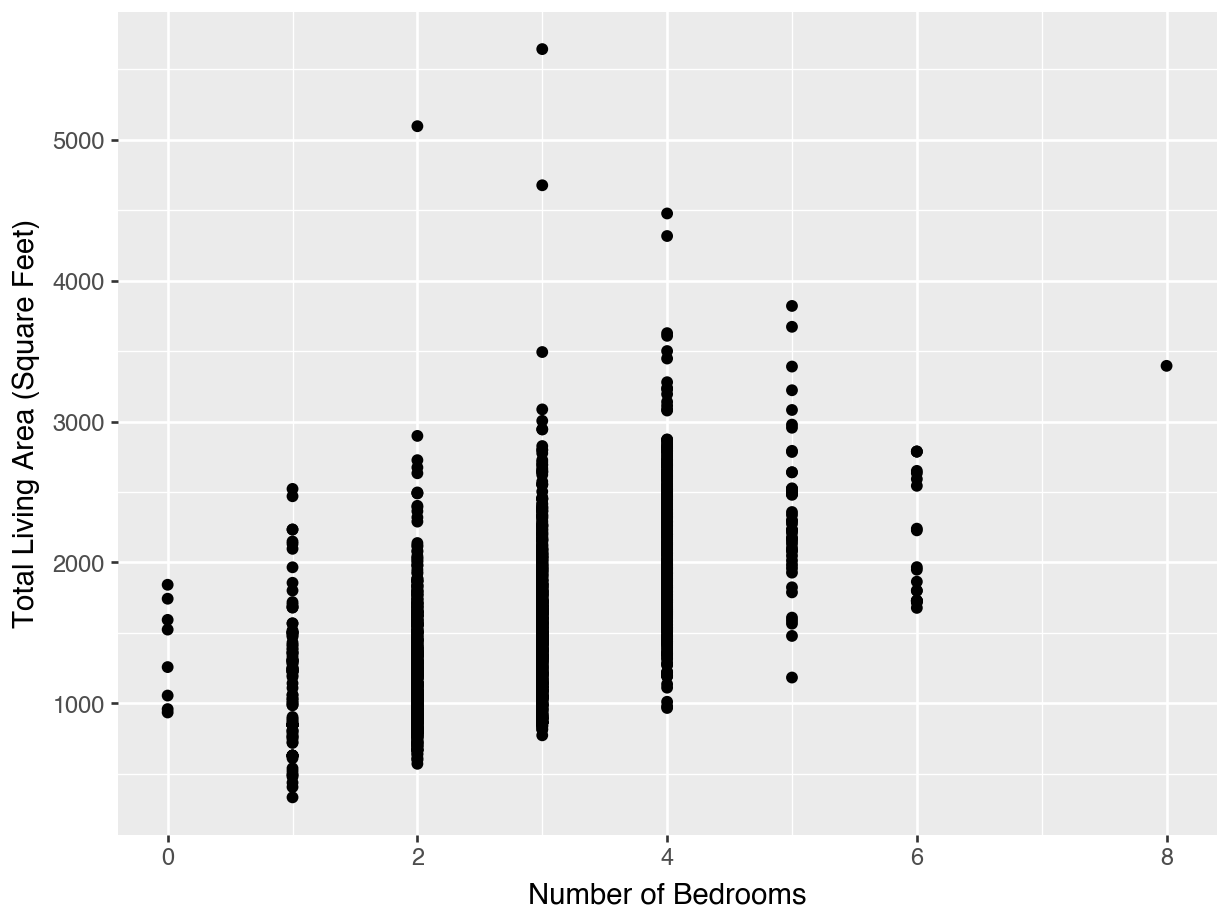

How does house size relate to number of bedrooms?


What statistic would you calculate?
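One candidate answer, given the tools summarized so far, is the correlation between the two quantitative variables. The sketch below assumes a tiny hypothetical DataFrame that reuses the Ames column names; the numbers are invented, so the result says nothing about the real houses.

```python
import pandas as pd

# Hypothetical toy data with the Ames column names (values are made up)
houses = pd.DataFrame({
    "Gr Liv Area": [1000, 1500, 1800, 2400, 3000],
    "Bedroom AbvGr": [2, 3, 3, 4, 5],
})

# Pearson correlation (the pandas default) between living area and bedroom count
r = houses["Gr Liv Area"].corr(houses["Bedroom AbvGr"])
print(round(r, 3))
```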

Measuring Similarity with Distance

Similarity

How might we answer the question, “Are these two houses similar?”

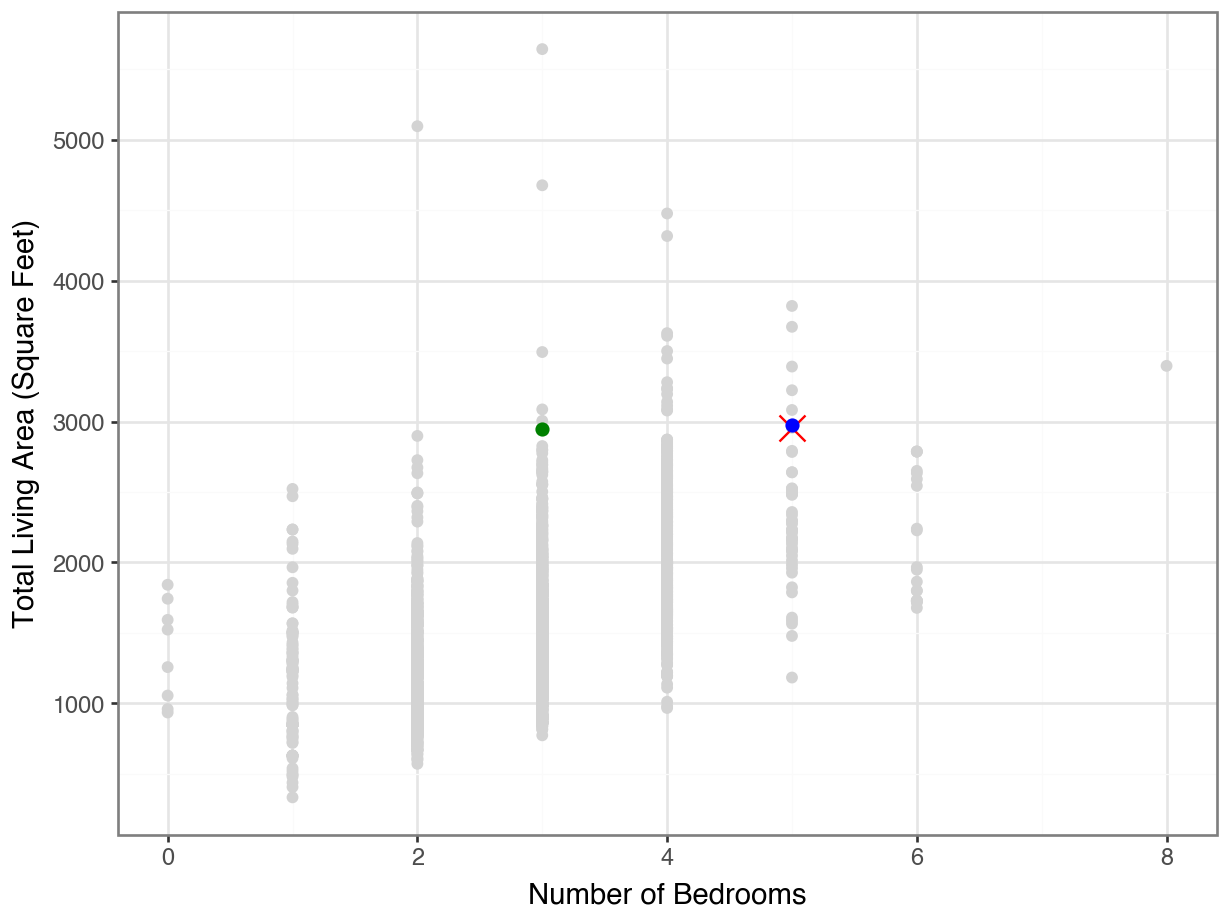

Distance

The distance between the two observations is:

\[ \sqrt{(2956 - 2650)^2 + (5 - 6)^2} = \sqrt{93637} \approx 306 \]

… what does this number mean? Not much!

But we can use it to compare pairs of houses and find the houses that are most similar to one another.
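The arithmetic above can be checked in a couple of lines. This sketch assumes each house is stored as a NumPy array of (living area in square feet, number of bedrooms); which house is which does not matter, because distance is symmetric.

```python
import numpy as np

# The two houses from the formula above: (living area, bedrooms)
house_a = np.array([2956, 5])
house_b = np.array([2650, 6])

# Euclidean distance: square root of the sum of squared differences
dist = np.sqrt(np.sum((house_a - house_b) ** 2))

# np.linalg.norm computes the same quantity directly
dist_norm = np.linalg.norm(house_a - house_b)

print(dist)  # about 306.0016
```

Notice that the bedroom difference contributes only 1 to the sum under the square root, while the living-area difference contributes 93636.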

Another House to Consider

Thus, house 1707 is more similar to house 290 than to house 291.

Lecture Activity Part 1

Complete Part One of the activity linked in Canvas.

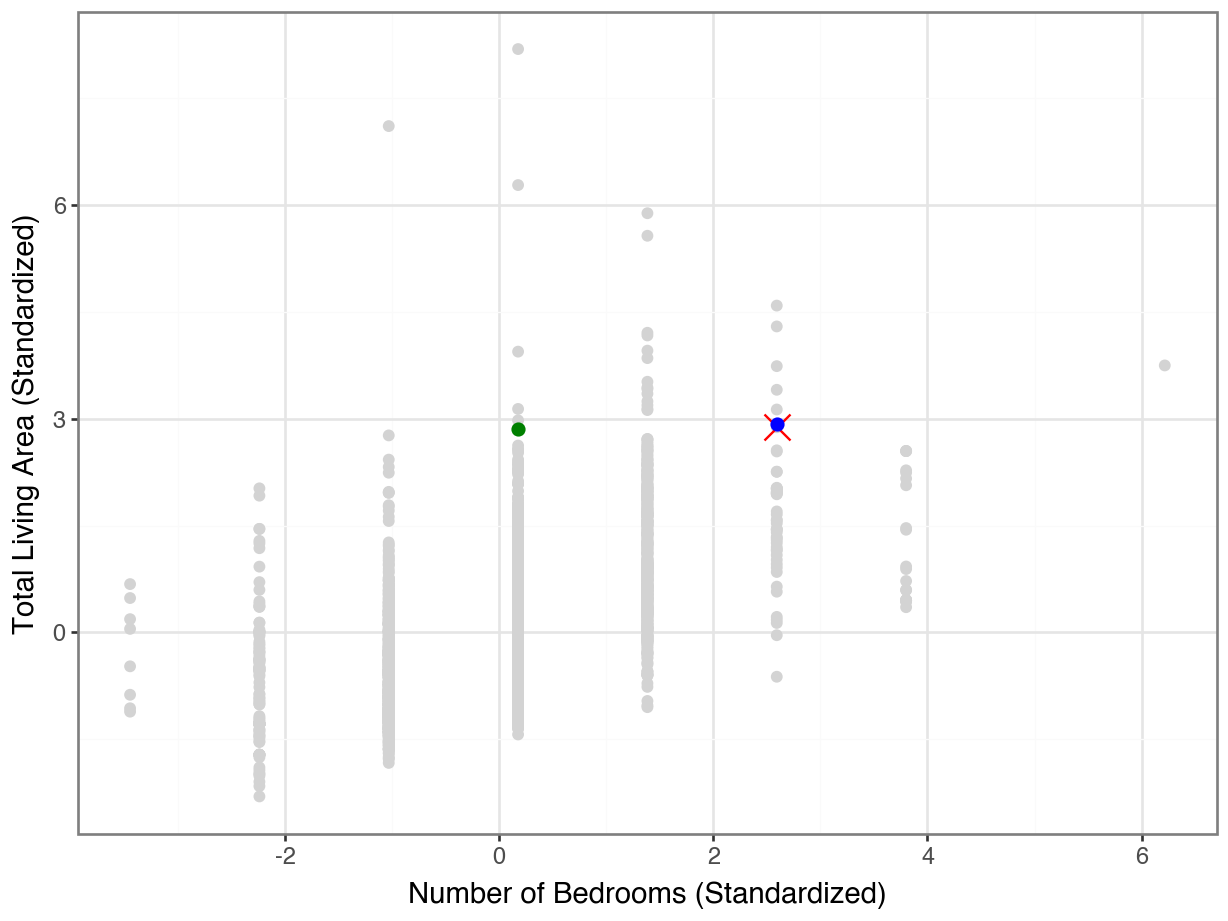

Scaling / Standardizing

House 160 seems more similar to 1707…


from plotnine import (ggplot, aes, geom_point, theme_bw, labs,
                      scale_x_continuous, scale_y_continuous)

(
    ggplot(housing, mapping = aes(y = "Gr Liv Area", x = "Bedroom AbvGr")) +
    geom_point(color = "lightgrey") +
    geom_point(housing.loc[[1707]], color = "orange", size = 5, shape = "x") +
    geom_point(housing.loc[[160]], color = "blue", size = 2) +
    geom_point(housing.loc[[2336]], color = "green", size = 2) +
    theme_bw() +
    labs(y = "Total Living Area (Square Feet)",
         x = "Number of Bedrooms")
)

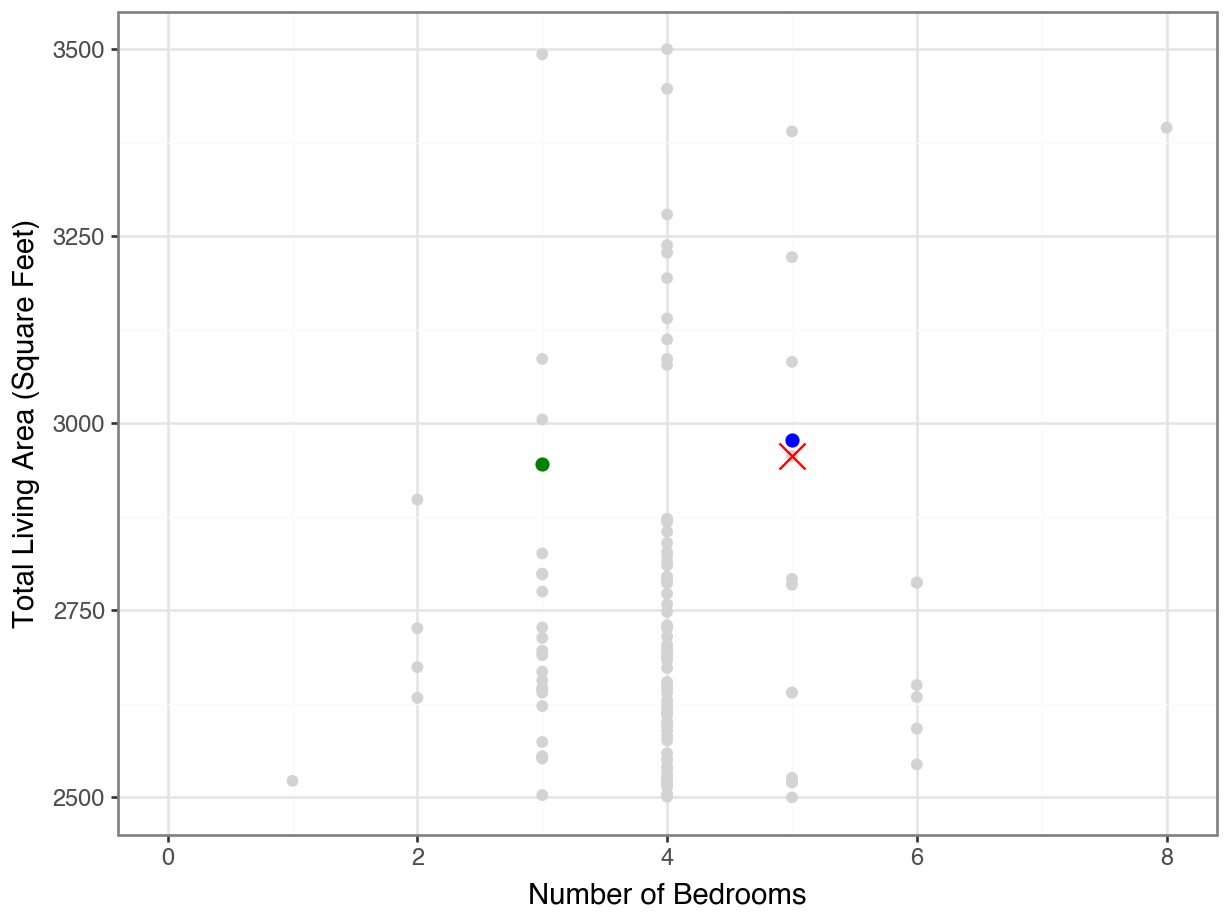

…even if we zoom in…


(
    ggplot(housing, mapping = aes(y = "Gr Liv Area", x = "Bedroom AbvGr")) +
    geom_point(color = "lightgrey") +
    geom_point(housing.loc[[1707]], color = "orange", size = 5, shape = "x") +
    geom_point(housing.loc[[160]], color = "blue", size = 2) +
    geom_point(housing.loc[[2336]], color = "green", size = 2) +
    theme_bw() +
    labs(y = "Total Living Area (Square Feet)",
         x = "Number of Bedrooms") +
    scale_y_continuous(limits = (2500, 3500))
)

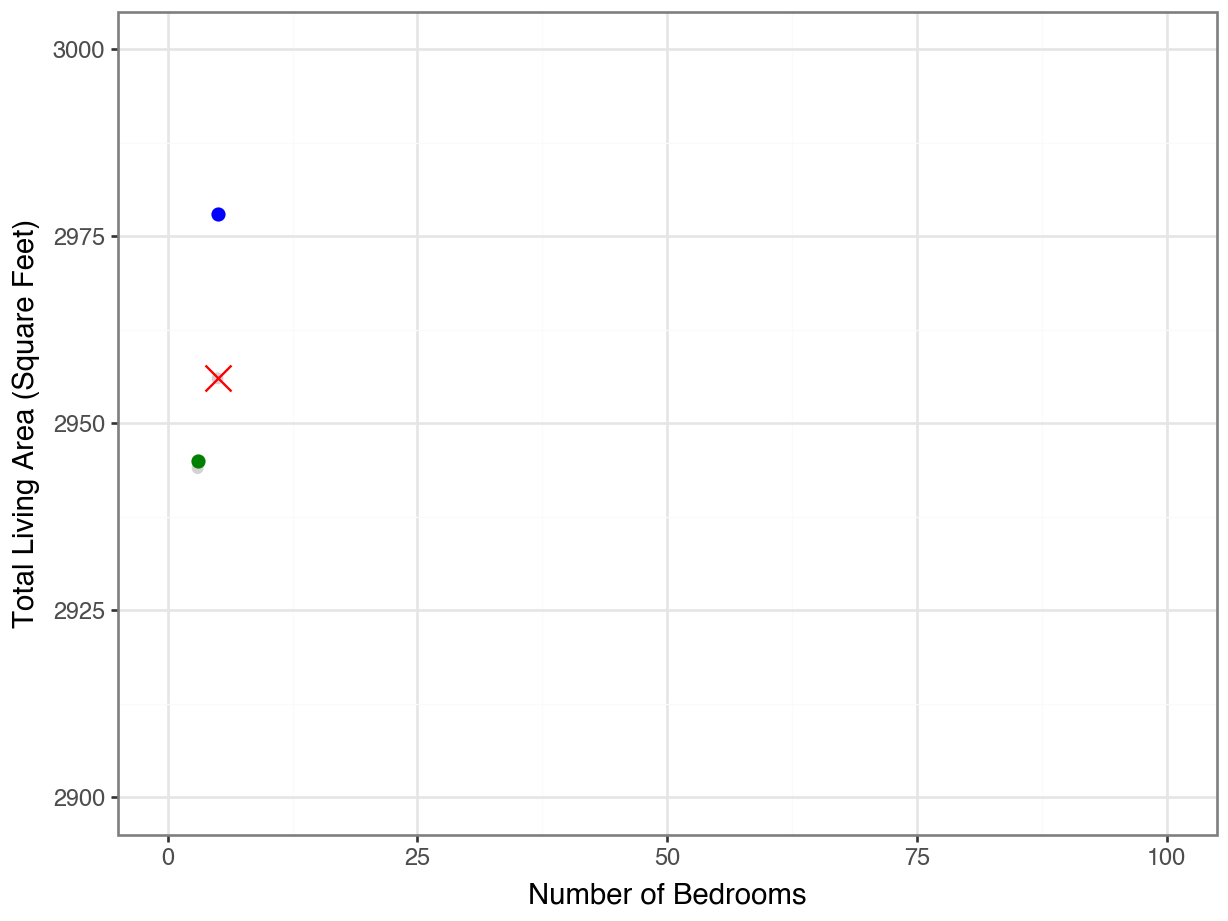

…but not if we put the axes on the same scale!


(
    ggplot(housing, aes(y = "Gr Liv Area", x = "Bedroom AbvGr")) +
    geom_point(color = "lightgrey") +
    geom_point(housing.loc[[1707]], color = "orange", size = 5, shape = "x") +
    geom_point(housing.loc[[160]], color = "blue", size = 2) +
    geom_point(housing.loc[[2336]], color = "green", size = 2) +
    theme_bw() +
    labs(y = "Total Living Area (Square Feet)",
         x = "Number of Bedrooms") +
    scale_y_continuous(limits = (2900, 3000)) +
    scale_x_continuous(limits = (0, 100))
)

Scaling

We need to make sure our features are on the same scale before we can use distances to measure similarity.

Standardizing

Subtract the mean, then divide by the standard deviation.
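A by-hand version of standardizing, sketched in pandas. It assumes a hypothetical five-row stand-in for `housing` (the values are invented) and creates columns named `size_scaled` and `bdrm_scaled`, matching the names used in the scaled scatterplot; the lecture's own scaling code is not shown, so this is one plausible way to build them.

```python
import pandas as pd

# Hypothetical stand-in for the housing DataFrame (values are made up)
housing = pd.DataFrame({
    "Gr Liv Area": [1000, 1500, 1800, 2400, 3000],
    "Bedroom AbvGr": [2, 3, 3, 4, 5],
})

# Standardize each feature: subtract its mean, divide by its standard deviation
size = housing["Gr Liv Area"]
bdrm = housing["Bedroom AbvGr"]
housing["size_scaled"] = (size - size.mean()) / size.std()
housing["bdrm_scaled"] = (bdrm - bdrm.mean()) / bdrm.std()

print(housing[["size_scaled", "bdrm_scaled"]])
```

After scaling, both columns have mean 0 and standard deviation 1, so a one-unit step means "one standard deviation" for either feature. Note that pandas `.std()` divides by n - 1, while scikit-learn's `StandardScaler` divides by n, so the two approaches give slightly different numbers.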

Scaling


(
    ggplot(housing, aes(y = "size_scaled", x = "bdrm_scaled")) +
    geom_point(color = "lightgrey") +
    geom_point(housing.loc[[1707]], color = "orange", size = 5, shape = "x") +
    geom_point(housing.loc[[160]], color = "blue", size = 2) +
    geom_point(housing.loc[[2336]], color = "green", size = 2) +
    theme_bw() +
    labs(y = "Total Living Area (Standardized)",
         x = "Number of Bedrooms (Standardized)")
)

Lecture Activity Part 2

Complete Part Two of the activity linked in Canvas.

Scikit-learn


scikit-learn is a library for machine learning and modeling. We will use it a lot in this class!

For now, we will use it as a shortcut for scaling and for computing distances.

The philosophy of sklearn is:

- specify your analysis
- fit on the data to prepare the analysis
- transform the data
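The three steps above can be sketched with `StandardScaler`. This is a minimal sketch, assuming a small made-up stand-in for the housing data; only the two column names come from the lecture, and the five rows are hypothetical.

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical stand-in for the housing features (values are made up)
features = pd.DataFrame({
    "Gr Liv Area": [1000, 1500, 1800, 2400, 3000],
    "Bedroom AbvGr": [2, 3, 3, 4, 5],
})

# 1. Specify: create the scaler object; nothing is computed yet
scaler = StandardScaler()

# 2. Fit: the scaler learns each column's mean and standard deviation
scaler.fit(features)
print(scaler.mean_)   # learned means, one per column
print(scaler.scale_)  # learned standard deviations, one per column

# 3. Transform: apply (x - mean) / sd to every column
scaled = scaler.transform(features)
print(type(scaled))   # a numpy array, not a DataFrame
```

Keeping fit and transform separate means the same learned means and standard deviations can later be applied to new data.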

Specify

StandardScaler()

No calculations have happened yet!

Fit

The scaler object “learns” the means and standard deviations.

StandardScaler()

Learned means: array([1499.69044369, 2.85426621])

Learned standard deviations: array([505.4226158 , 0.82758988])

We still have not altered the data at all!

Transform

sklearn, numpy, and pandas

By default, sklearn functions return numpy objects. This is sometimes annoying; for example, we may want to plot things after scaling.

Solution: rebuild a pandas DataFrame from the array, with the original column names.
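A minimal sketch of rebuilding the DataFrame, assuming a hypothetical five-row stand-in for the features (the values are invented). Reusing the original `index` as well as the `columns` keeps the row labels lined up with the unscaled data.

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical stand-in for the housing features (values are made up)
features = pd.DataFrame({
    "Gr Liv Area": [1000, 1500, 1800, 2400, 3000],
    "Bedroom AbvGr": [2, 3, 3, 4, 5],
})

# fit_transform() runs fit and transform in one call and returns a numpy array
scaled = StandardScaler().fit_transform(features)

# Wrap the array back into a DataFrame with the original column names and row labels
scaled_df = pd.DataFrame(scaled, columns=features.columns, index=features.index)
print(scaled_df)
```

In scikit-learn 1.2 and later, calling `.set_output(transform="pandas")` on the scaler is another way to get a DataFrame back directly.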

|      | Gr Liv Area | Bedroom AbvGr |
|---|---|---|
| 0 | 0.309265 | 0.176094 |
| 1 | -1.194427 | -1.032234 |
| 2 | -0.337718 | 0.176094 |
| 3 | 1.207523 | 0.176094 |
| 4 | 0.255844 | 0.176094 |
| ... | ... | ... |
| 2925 | -0.982723 | 0.176094 |
| 2926 | -1.182556 | -1.032234 |
| 2927 | -1.048015 | 0.176094 |
| 2928 | -0.219006 | -1.032234 |
| 2929 | 0.989884 | 0.176094 |

[2930 rows x 2 columns]

Distances with sklearn
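A minimal sketch of computing every pairwise distance at once with `sklearn.metrics.pairwise_distances`, using the first three rows of the standardized table above (as printed) as a small stand-in for the full data.

```python
import numpy as np
from sklearn.metrics import pairwise_distances

# Rows 0-2 of the standardized table: (Gr Liv Area, Bedroom AbvGr)
scaled = np.array([
    [0.309265, 0.176094],
    [-1.194427, -1.032234],
    [-0.337718, 0.176094],
])

# Entry [i, j] is the Euclidean distance between house i and house j
dists = pairwise_distances(scaled)
print(dists.round(3))
```

The result is a square, symmetric matrix with zeros on the diagonal, since every house is at distance 0 from itself.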

Finding the Most Similar
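One way to find the most similar house, sketched under the assumption that the first five rows of the standardized table are the whole dataset: compute each house's distance to a target house, sort the distances, and skip the first entry, which is the target itself at distance 0.

```python
import numpy as np
from sklearn.metrics import pairwise_distances

# Rows 0-4 of the standardized table: (Gr Liv Area, Bedroom AbvGr)
scaled = np.array([
    [0.309265, 0.176094],
    [-1.194427, -1.032234],
    [-0.337718, 0.176094],
    [1.207523, 0.176094],
    [0.255844, 0.176094],
])

# Keep the target as a 2-D array with one row (row 0 here)
target = scaled[[0]]

# Distance from every house to the target, flattened to 1-D
dists = pairwise_distances(scaled, target).ravel()

# Row positions sorted from closest to farthest
order = np.argsort(dists)
most_similar = order[1]  # order[0] is the target house itself
print(most_similar, dists[most_similar])
```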

Lecture Activity Part 3

Complete Part Three of the activity linked in Canvas.

Alternatives

Other scaling

Standardization \[x_i \leftarrow \frac{x_i - \bar{X}}{\text{sd}(X)}\]

Min-Max Scaling \[x_i \leftarrow \frac{x_i - \text{min}(X)}{\text{max}(X) - \text{min}(X)}\]
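Min-max scaling is also available in scikit-learn as `MinMaxScaler`. This sketch, on hypothetical values, checks it against the formula above: each column ends up with minimum 0 and maximum 1.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical (living area, bedrooms) values; made up for illustration
X = np.array([
    [1000, 2],
    [1500, 3],
    [1800, 3],
    [2400, 4],
    [3000, 5],
], dtype=float)

# scikit-learn's version: fit learns each column's min and max, then transforms
scaled = MinMaxScaler().fit_transform(X)

# The same formula written out column by column
by_hand = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

print(scaled)
```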

Other distances

Euclidean (\(\ell_2\))

\[\sqrt{\sum_{j=1}^m (x_j - x'_j)^2}\]

Manhattan (\(\ell_1\))

\[\sum_{j=1}^m |x_j - x'_j|\]
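To compare the two distances on the same pair of houses, `pairwise_distances` accepts a `metric` argument. This sketch uses rows 0 and 1 of the standardized table as the two observations.

```python
import numpy as np
from sklearn.metrics import pairwise_distances

# Rows 0 and 1 of the standardized table, each kept as a one-row 2-D array
a = np.array([[0.309265, 0.176094]])
b = np.array([[-1.194427, -1.032234]])

# Euclidean (l2): square root of the sum of squared differences
euclid = pairwise_distances(a, b, metric="euclidean")[0, 0]

# Manhattan (l1): sum of absolute differences
manhattan = pairwise_distances(a, b, metric="manhattan")[0, 0]

print(euclid, manhattan)
```

For any pair of points, the Manhattan distance is at least as large as the Euclidean distance, since it adds up the full length of each coordinate difference instead of taking the straight-line shortcut.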

Takeaways


We measure similarity between observations by calculating distances.

It is important that all our features be on the same scale for distances to be meaningful.

We can use scikit-learn functions to fit and transform data, and to compute pairwise distances.

There are many ways to scale data; the most common is standardizing.

There are many ways to measure distance; the most common is Euclidean distance.